Is "Wrestling" Fixed?

The report "Wrestling with social and behavioral genomics" has good points, but risks being interpreted so as to bias scientific research. In response, I propose a Principle of Symmetry.

Excuse the hiatus. I was nerd-sniped by a little software project, of which more below. Then I was at ESSGN Bologna. It was great. A few highlights:

Getting schooled by a first year PhD student on how to correct for measurement error in estimating the effect of natural selection on polygenic scores. I updated my original post, and wrote this primer for idiots (such as myself) as a penance.

Watching Paige Harden present. This was interesting and funny. Her affect is very much to build bridges with the humanities. There were quotes from Bruno Latour and comments about the “work of policing boundaries”. Everyone was enthusiastic and inspired… so much so that they barely seemed to notice that the work presented was, get this, a high-powered Genome-Wide Association Study to predict being a criminal. It was like watching a street magic show, then feeling for your wallet and finding it had been replaced with a live hand grenade.

Still much paranoia about the field’s survival. One US professor complained about the woke opposition in his department trying to cancel him. Then he said “oh, I’m off to a conference in the UK.” Really, what? “National conservatism.” Pause as I tried to guess just how “national” national conservatism was. (Judge for yourself.)

Staying on the genetics theme: a new report, Wrestling with Social and Behavioral Genomics, has come out about when it is OK to investigate the genetics of sensitive characteristics and group differences. I’ve written on this topic, so I was interested to read it.

Overall, I think the report’s publication is a good sign. But the report itself is a mixed bag. I won’t spare the critique, because my background view is that many funders/researchers/gatekeepers in the field are doing something unethical (holding back research on topics of vital human concern), whilst mistakenly believing they are doing something ethical (preventing fascists from finding an intellectual justification). In those situations, you have to be loud and clear, so as to challenge people’s certainties.

As before, trigger warning: this is a sensitive topic. Also, marathon warning: it’s a very long post. I considered splitting it up, but I think its arguments belong together. Also, nerd warning: this is partly aimed at researchers, so it gets technical in places.

WWE All Stars

First, it’s good that these guys published this report. The authors include names seriously respected within the field (inter alia Dan Benjamin, Patrick Turley, Ben Neale, Eric Turkheimer, and indeed Paige Harden). Their views carry weight and they have expertise. And it’s good to discuss “what to do about controversial genetic research” openly. That is much better than making these decisions in the dark, with people nervously readjusting their ties. Open arguments can be challenged, which is the essence of both science and liberalism.

The authors — it’s a report of a working group — disagreed amongst themselves. Also good. When Very Important People are all unanimous, well, maybe they are right, but it will be very hard to go against them if they are wrong, especially in a close-knit and quite centralized field like behaviour genetics. Open disagreement is a sign that debate is possible and consensus is not frozen.

There’s a lot of knowledge, wisdom and good sense in the report, too. Specifically:

There is an expressed commitment to the inherent value of knowledge, and to the importance of free enquiry. This is not a woke whitewash: the authors really are wrestling with difficult and complex issues.

There’s a thoughtful discussion of the scientific and medical value of predictions and correlations, over and above the value of causal knowledge.

The authors point out the ethical issue that doing population-specific genetics can disproportionately benefit people of European genetic ancestries, and not others — here called “white person medicine”.

There is plenty to learn, including a brief history of early genetic research and eugenics, and an insightful introduction to the tools of modern genomics.

Many of the suggestions about how to present one’s research are sensible and useful.

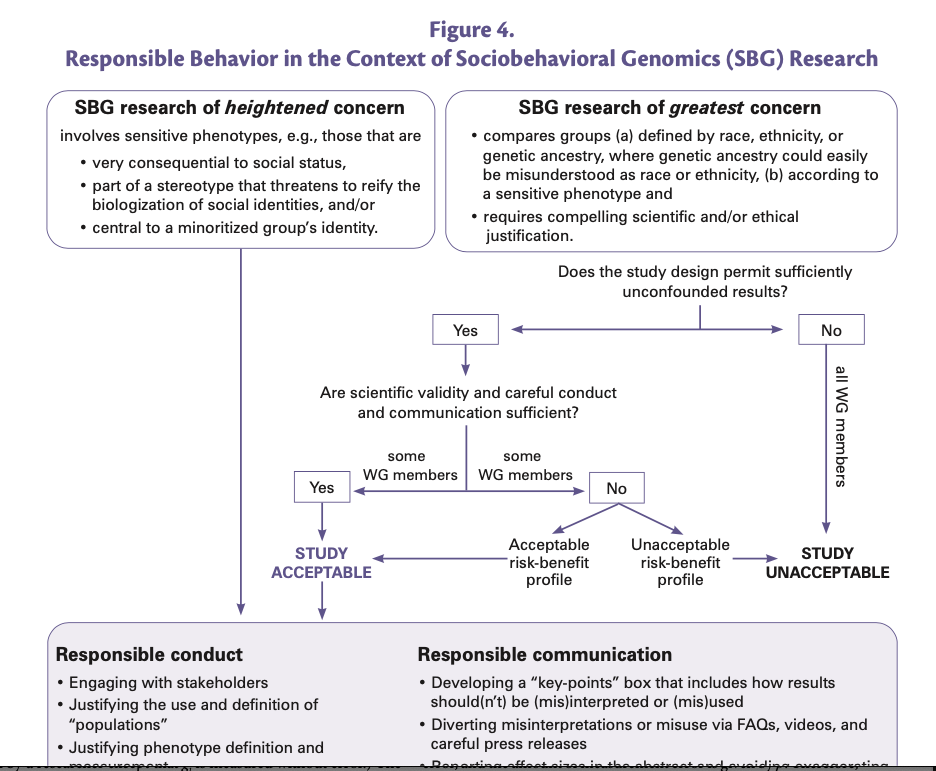

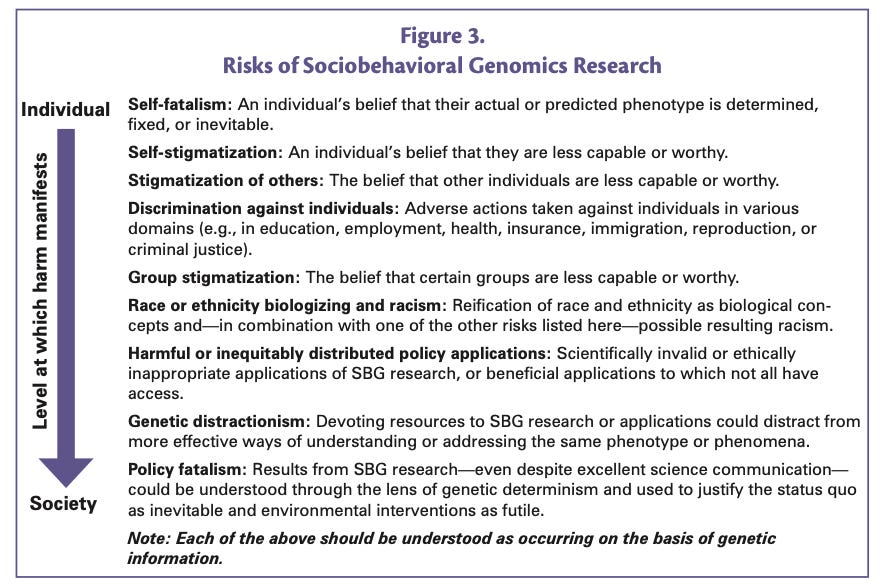

Lastly, this picture sums up the report’s basic recommendations:

I think if you take the more “liberal” set of working group members, who think it’s enough to do scientifically valid research and careful communication, then… basically this might be fine? Because one interpretation of what it says is simply “don’t do bad, confounded research”.1 And since bad research indeed should not be done, and avoiding it is a key part of all science, this report is then essentially recommending that we treat SBG research like all other scientific research. That is roughly what I believe, and implementing it in practice would be a large step forward for the field.

That said, I don’t think this is how most readers are likely to interpret the report. Instead, I think it will get interpreted as a warning to not do certain kinds of research. That is a very dangerous route to go down, and it requires a very high standard of proof. So now I’ll give some criticisms.

Are you ready to rumble

… in America wrestling represents a sort of mythological fight between Good and Evil (of a quasi-political nature…)

Roland Barthes, “The world of wrestling”

As I said, the authors are high-powered and influential; I wish the report showed more awareness of the risks of that. It includes a history of early genetics and eugenics. That early science was eventually implicated in the large-scale human rights abuses of the Nazi regime, as well as abuses in the US and elsewhere. It’s quite right to think about that in this context.

However, it’s also important to recall the broader context of twentieth-century science and its relationship with political regimes. Here is a table which I find helpful:

That is greatly simplified and perhaps a bit rhetorical — liberal democratic science did do some bad things, as the report makes clear — but it does capture the reality that any ideology can become dangerous when politics makes it immune to criticism. (Communist China, by the way, still has a eugenics movement, and it may be implicated in the persecution of Uighurs.)

US science is not at high risk of perpetuating human rights abuses, but it may be at risk from increasing political interference in, perhaps even of centralized control over, the scientific process.

In this context, it’s relevant that all the authors are at US institutions…

… and that US academia is in, uh, a “special” place right now. Which relates to my little nerd-sniped software project, when I tried to compare the share of Democrats in US academia to the share in the population:

No one expects scientists to be apolitical, but US universities have all the viewpoint diversity of the Branch Davidians. That matters, because one of the background issues here is that right-wing extremists have an interest in genetic research. While we may disagree on how to get there, I think we can all agree on the goal that Nazis should not be encouraged by our scientific work to go on killing sprees. But the report shows an unfortunate slippage between this goal, and a broader, less-defined and more controversial one, of opposing political conservatism more generally. For instance:

… there is reason to fear that, despite the best intentions of the researchers in our working group, results from SBG research will be used to reinforce the status quo. (S19)

Elsewhere, there is loose vocabulary like “resisting regressive political actors” (S17).

The context here is the development of polygenic indices/scores (PGIs) for educational attainment, which can be used to predict how much education different people will end up acquiring, and which capture (some!) genetic variants that actually cause that variation. At this point, the context is not specifically about intergroup differences, just about the use of PGIs more generally.

What would an argument look like which used PGIs for educational attainment to reinforce the status quo? Something like this, maybe:

“Educational outcomes between rich and poor are very unequal in this (country/city/region). You say that this shows the education system is unjust, and that we need to change it by (increasing school budgets in poor areas/moving away from standardized testing that reflects socioeconomic advantage/providing scholarships aimed at poor students). I disagree. If you control for PGIs for educational attainment, the differences are substantially reduced, and I bet that if we had better PGIs, they would be reduced further. There’s no social injustice here, just outcomes which reflect individual ability, and your proposed changes will fail, or even be counterproductive.”

You may agree or disagree with this argument, perhaps depending on the details of the status quo, the proposed policy change, and how exactly one parses “social injustice”. Scientists are absolutely within their rights to do that, and to contribute to that debate, for example by giving their expert opinions on the meaning and limits of polygenic scores. But it would absolutely not be legitimate to use that debate as a reason to prevent or defund further research on polygenic scores on educational attainment.

In that sense, it’s not reassuring that, as they put it:

all of the working-group members… shared at least one fundamental political view: that our society is afflicted by intolerable discrimination and inequality…. None of us agrees with those who appeal to genetics as a justification for the status quo or as an excuse for not intervening to mitigate the social injustices that plague our society (S7).

Again, they are absolutely in their rights to think so, and to act on it as citizens, individually and collectively. They can also do scientific work to support that view: lots of social scientists are motivated by their political concerns, and that is fine, so long as the resulting work is good science.

But here is where the political narrowness of US academia bites: not everyone agrees with them. Not everyone thinks that the richest and freest society in the world is afflicted by literally intolerable inequality and discrimination. People prioritize a wide range of political goals. Some want to preserve a free economy. Others care about protecting the environment, increasing long-run growth at any cost, naturalizing one billion Americans, preventing AI takeover, or ending legal abortion. Other people just want to grill: rather than dedicating their lives to the removal of an intolerable evil, they work as bar attendants, rodeo clowns or even geneticists.

All of these different groups are entitled to pursue their political goals, including by doing science. None of them should pursue them by preventing science being done. In this context, the fact that the working group all agreed with one view is a danger sign.

(PS. I checked Twitter and found that the Report authors were aware that they might not be hugely diverse on various dimensions, including political views. To fix that, they recruited a Community Sounding Board. How well did that work out? Oh, I mean, guess:

Bless them for trying.)

The referee’s got him in a chokehold!

This leads to the key problem with the recommendation, endorsed by the whole group, to publish work on the topics of greatest concern only if it is “sufficiently unconfounded”. Their wording is strong and clear:

“We all recommend that, absent the relevant compelling justification(s)… researchers not conduct, funders not fund, and journals not publish research on sensitive phenotypes that compares groups defined by race, ethnicity, or genetic ancestry, where genetic ancestry could easily be misunderstood as race or ethnicity.”

The relevant compelling justification which the whole group agrees on is sufficient scientific validity2, and the problem is that there’s an ambiguity. Is genetic research comparing groups going to be held to the same standard of scientific validity as other research, or a higher standard?

This matters because scientific validity is not black and white. No real-world research is perfect, it all has flaws and can be critiqued. Who knows this better than geneticists? They have used twin studies, adoption studies, twins reared apart, and the new tools of GWAS and polygenic scores, to support the basic thesis that genetics matters, and after fifty years some people still aren’t convinced! Evaluation of research can be very subjective. Also, the history of science is complex: a flawed research design can kickstart an important discovery. Even the Eddington expedition to test relativity made some controversial data cleaning decisions.

If the argument is that genetic research should have the same threshold for publication as other research, then sure, who would disagree? Nobody wants shoddy work published.

But I suspect many people, including journal editors and funders, will read the report as recommending that genetic research needs to reach an especially high standard of quality before being published. The authors certainly argue that the issues are highly sensitive, and they lay out a set of risks from mistaken results, which they imply are unique to genetic research.

Publication thresholds are not uniform. Particle physics has higher standards of statistical significance than economics. In some fields an R-squared of 50%3 would be ordinary. In others, 10% is a success. But these variations are across different research topics. It’s not usual to require different thresholds of validity for different explanations of the same thing — say, for genetic versus environmental causation of some kind of differences. Why not is obvious: if you do so, you will bias the science. The published literature will be full of one side of the story. The other side, like a wrestler facing a partial referee, won’t get a look in.
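A toy simulation shows how different bars for competing explanations tilt a literature. Everything here is a made-up assumption, not a figure from the report: half of all studies test a genetic explanation and half an environmental one, both kinds have the same true effect (a z-statistic of 2 on average), and a study is published only if its observed z-statistic clears its bar. The function name and the thresholds (1.96 versus 3.0) are hypothetical.

```python
import random


def published_counts(n_studies, true_z, bar_genetic, bar_environmental, seed=0):
    """Count published studies of each kind in a hypothetical literature.

    Half the studies test a genetic explanation, half an environmental one.
    Both have the SAME true effect (true_z, in z-statistic units) and the
    same unit noise; only the publication bar differs.
    """
    rng = random.Random(seed)
    counts = {"genetic": 0, "environmental": 0}
    for k in range(n_studies):
        kind = "genetic" if k % 2 == 0 else "environmental"
        observed_z = true_z + rng.gauss(0.0, 1.0)
        bar = bar_genetic if kind == "genetic" else bar_environmental
        if observed_z > bar:
            counts[kind] += 1
    return counts


# Same bar for both explanations: the published literature is roughly balanced.
print(published_counts(10_000, 2.0, 1.96, 1.96))
# Higher bar for genetic explanations: the literature tilts environmental,
# even though the simulated truth is identical for both.
print(published_counts(10_000, 2.0, 3.0, 1.96))
```

With these assumed numbers, roughly half the environmental studies clear the bar but only about one genetic study in six does, so a reader of the journals would see about three environmental findings for every genetic one.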

The report lays out a set of risks of bad or misinterpreted genetic research. Are these enough to defeat the normal presumption of equal treatment for different explanations?

This list has problems. It is unweighted, it is one-sided, and it is evidence-free.

Trash talk

The list mixes two kinds of risks. One is stigmatization: people holding bad beliefs about individuals or groups, including perhaps themselves. The other is bad research and its results: harmful policy applications and genetic distractionism. In many cases, the costs of these are likely to be drastically different. To think about this, it might help to consider a specific example of an intergroup difference.

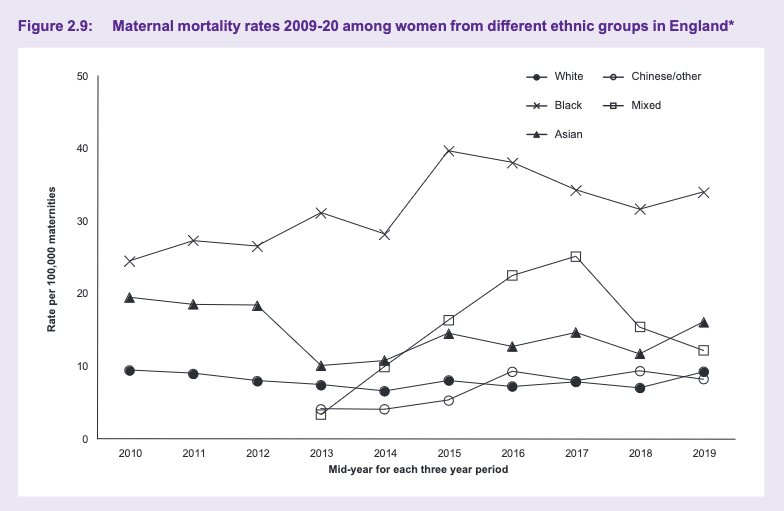

Rates of death in or around childbirth differ substantially by ethnic group, in both the US and the UK.

It is not hard to think of potential environmental explanations for this difference. There are macro-environmental differences, like differences in maternal age, the places where people from different ethnic groups live, or differences in income and poverty. There could also be “micro-environmental” differences. For instance, people from similar backgrounds but differing only by ethnic group might receive different treatment in hospital. It is also possible that genetic differences could play a role. Childbirth is obviously a biological process — as well as a social and cultural event — and at least some differences between groups are partly under genetic control.

Death in childbirth is one of the most terrible things that can happen to anyone. It is a deep unfairness that some people should be more at risk of this awful tragedy. It is a kind of primal unfairness: I do not think it would be less unfair if it turned out to have genetic roots. It is also something that society ought to address, again, whether or not its causes are genetic.

As an expectant parent of one of these ethnic minorities, you face two kinds of risks from research on this topic. The first is that the results of the research might stigmatize you. For example, if the research finds that indeed twin births play into elevated perinatal death risk, and that intergroup differences in the probability of twin birth are partly caused by genetics, then maybe someone will go around saying “oh, ethnic group X breeds like rabbits”.4

The second risk is that bad research is done, or good research is not done. It fails to uncover the true mechanisms behind these differences. Appropriate treatments or policy responses are not developed. As a result, you die in childbirth.

Without discounting the first risk, I hope we can agree that the second risk is of an entirely different order. But the report does not consider any such weighting.

That is partly because the list above is also one-sided. It fails to consider the other side of the story: the risks of not doing good research on genetic causes of group differences, and/or of biasing science towards environmental causes. Just as “genetic distractionism” might lead us away from useful environmental interventions, “environmental distractionism” might lead us away from useful interventions based on understanding genetic causes. (Direct genetic interventions are not really feasible today, and raise their own ethical issues, but they aren’t why most researchers study genetics.)

Lastly, the list of risks is evidence-free. How serious are the risks of stigmatization and self-stigmatization? How likely is genetic research to contribute to them? This part of the report is a footnote-free zone, with no references to empirical work on these topics. That’s a pretty surprising omission, given that elsewhere, it puts so much emphasis on demanding high standards of proof. (They could have cited the literature on stereotype threat. That research isn’t problem-free, but it would have been better than nothing.)

The authors might argue that my example is unfair; maternal mortality is a medical characteristic, while they are focused on social and behavioural characteristics. But this is a nearly meaningless distinction: very many medical issues, including childbirth, have a social or behavioural aspect. As the report states, it is “difficult to draw a line between medical and SBG phenotypes.”

In any case, the report clearly takes an expansive view of the research it covers:

Schizophrenia is a sensitive phenotype, and so a study attempting to compare the prevalence of schizophrenia in groups defined by race, ethnicity, or genetic ancestries that can easily be mistaken for races or ethnicities should be justified before it is conducted, funded, or published.

This is the justification, recall, that requires very high standards, which cannot be met today, and which some authors believe will never be met.

That is an astonishing claim.

I know the danger of using personal experience as an argumentative bludgeon, but I have been in mental hospital with acute psychosis, a syndrome of symptoms related to schizophrenia, and which can become schizophrenia if it recurs. I have heard the evil voices. I have taken, and been forced to take, the mind-numbing drugs called major tranquillizers, and I am grateful beyond measure that they worked. I have experienced the horrible reality behind the anodyne phrase that psychotics “become confused about time”.

Going through this, the risks of being stigmatized were absolutely nowhere on my radar. All I wanted was to be helped.

In schizophrenia, as in maternal mortality, there are large differences between ethnic groups. Are the authors seriously arguing that we shouldn’t do everything we can to find out why this actually happens, because it might lead to people being stigmatized — a claim made on the basis of zero evidence? That this should be classified as between-group SBG research, which “all working-group members view… as presumptively scientifically invalid” (S34)? (We’ll evaluate this argument below.)

The report does list some potential benefits of SBG research. These could provide a counterbalance to the one-sided list of risks. But this list too is a bit weird. It is strongly focused on research which proves that genetics don’t matter much. For instance, it says “SBG research can help demonstrate the limits of genetic influence,” giving the example of research on the X chromosome. That’s a strange way to put it. Any research that provides evidence that a factor’s influence is limited must also have been potentially able to give evidence that its influence was large. (That’s what it means to count as evidence!) The wording gives the unfortunate impression that SBG research is only useful when it leads to the right conclusions. Other possible benefits include using polygenic scores as controls in randomized trials (Box 4, S24).

This all seems secondary. Surely a major point of doing SBG research is to learn why and how genetic variants cause outcomes? After all, for health outcomes, the report admits this, listing the use of the educational attainment PGI in investigating various diseases (S24). Again, this gives the — I hope misleading — impression that for the authors, health outcomes are “real” and we need to find out what really causes them; but for outcomes like education, that’s less important compared to coming up with non-stigmatizing conclusions.

One potential benefit is mentioned almost casually:

… sometimes phenotypic relationships are confounded by genes. For example, a researcher who observes an effect of parenting style on child outcomes cannot be sure that the outcomes are directly influenced by the parenting style as opposed to by genes that are influencing both the parenting style and the outcome. Ignoring the latter possibility risks getting the wrong empirical answer. (Box 5, S26)

That’s true, and as we shall see, it conceals a bit of a timebomb.

Tag-teaming genetics

What are the relative risks, right now, of overestimating or underestimating environmental contributions to intergroup differences? Which is more likely?

I think this is a no-brainer. To publish anything about genetic causes of intergroup differences, in any serious journal, would require going through a world of pain, and being held to very high standards indeed. You would have to get funded to do the research. Few funding bodies have the incentive to touch anything so explosive. In America, you might need to pass an IRB (institutional review board for ethics). Good luck with that. Then you would be held to very, very high standards of proof before it was published. If anyone from the field disagrees, perhaps they could pass on their experience.

Maybe those very high standards are a good idea! This kind of research does have risks, and it does need to be done carefully and well. Before we evaluate that, let’s just compare the standards required for publishing about possible environmental contributions.

Here are some examples. The literature on perinatal mortality points out that mothers who live in poorer areas are more likely to die in childbirth.5 This idea has made its way into the policy literature.

The association between area poverty and maternal mortality is an important descriptive fact, which researchers need to explain, and policy-makers need to address. But I think most empirically-informed and fair-minded scientists would agree that it does essentially nothing to prove a causal link between the two. This is for the usual sad reason that in social science, bad things go together: deprived neighbourhoods and poor health and less education and younger parents (and different genetics: you know, there’s a paper about that), and, and, and…. Any combination of these things could be a causal factor.

As for schizophrenia, here’s a paper arguing, on the basis of purely correlational evidence, that one cause of schizophrenia might be “having a negative ethnic identity”. I am no expert, but I am dubious that this result (a) would stand up to the inclusion of a full set of controls or (b) will lead to useful avenues for treatment.

I would argue that it is already much more difficult to publish research on genetic than environmental causes of individual differences. Partly because you need DNA data, and most of our data sources don’t have that; but also partly because, even after fifty-plus years of behaviour genetics, the vast majority of social scientists understands genetics little, trusts it less, and is highly invested in discovering and estimating environmental causes. As for publishing on the causes of between-group differences: come on, be honest. There is just no comparison.

In this context, the Hastings report’s one-sided evaluation of the risks of genetic research throws more weight on to a very unbalanced scale.6

Crying foul

The report argues that between-group comparisons using genetics are “presumptively scientifically invalid” (S34). If that’s true, then there’s nothing to argue about: everyone agrees that bad, confounded research is pointless. Is it true, though? Here we need to get into the nitty-gritty. I’ll focus on polygenic scores, because the report does the same and they’re a very common research design.

The goal of a polygenic score is to take data representing a person’s DNA and turn it into a single number which predicts something about that person. Conceptually, it’s like a multiple regression:

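$$\mathrm{PGS}_i = \beta_1 X_{1i} + \beta_2 X_{2i} + \dots + \beta_N X_{Ni} = \sum_{j=1}^{N} \beta_j X_{ji}$$

where $\mathrm{PGS}_i$ is person i’s score, and the X’s are (say) a million different SNPs, each of them represented as 0, 1 or 2: the number of copies person i has of the rare allele.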
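In code, the score is just a weighted sum of allele counts. A minimal sketch: `polygenic_score` is a hypothetical helper, and the three made-up weights stand in for the million or so GWAS estimates a real score would use.

```python
def polygenic_score(allele_counts, weights):
    """Weighted sum of allele counts for one person: sum over SNPs j of beta_j * X_j.

    allele_counts: copies of the counted allele (0, 1 or 2) at each SNP.
    weights: one beta per SNP, e.g. taken from GWAS summary statistics.
    """
    if len(allele_counts) != len(weights):
        raise ValueError("need exactly one weight per SNP")
    if any(x not in (0, 1, 2) for x in allele_counts):
        raise ValueError("allele counts must be 0, 1 or 2")
    return sum(beta * x for beta, x in zip(weights, allele_counts))


# Hypothetical toy example with three SNPs instead of a million.
weights = [0.02, -0.01, 0.005]
print(polygenic_score([2, 0, 1], weights))  # roughly 0.045
```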

How do we get the βs with which to weight each X? You run a genome-wide association study or GWAS. Just think of it as running a big multiple regression to estimate them:

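$$Y_i = \alpha + \beta_1 X_{1i} + \beta_2 X_{2i} + \dots + \beta_N X_{Ni} + \varepsilon_i$$

where Y is your dependent variable, say educational attainment or height. Then you can estimate the βs, and the PGS is your “Y-hat”, the predicted value of the dependent variable (up to the constant α). It isn’t really a multiple regression, because we have more SNPs than we have people in our data, so you need clever hacks to work around that. (In practice, a GWAS usually runs a separate regression for each SNP, and methods for building the score then adjust for correlations between nearby SNPs.) But the most important issues here do not hang on the details of the clever hacks. Here’s what matters: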

Most of the β’s — the size of the effect of each SNP — are probably very tiny. So unless you have a huge sample, you will find your estimate of the β is swamped by noise.
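To see why tiny effects need huge samples, here is a sketch that simulates one SNP with a small true effect and estimates it by ordinary least squares, as a single-SNP GWAS regression would. The values (β = 0.02 on a trait with unit noise, allele frequency 0.3) and the helper names are hypothetical.

```python
import math
import random


def ols_slope_and_se(x, y):
    """Simple regression y = a + b*x + e. Returns (b, standard error of b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    rss = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    return b, math.sqrt(rss / (n - 2) / sxx)


def simulate_snp(n, beta, allele_freq, rng):
    """Hypothetical data: allele counts ~ Binomial(2, allele_freq); trait = beta*x + N(0, 1)."""
    x = [(rng.random() < allele_freq) + (rng.random() < allele_freq) for _ in range(n)]
    y = [beta * xi + rng.gauss(0.0, 1.0) for xi in x]
    return x, y


rng = random.Random(1)
true_beta = 0.02
for n in (1_000, 100_000):
    b, se = ols_slope_and_se(*simulate_snp(n, true_beta, 0.3, rng))
    print(f"N = {n:>7,}: estimate {b:+.4f}, standard error {se:.4f}")
```

Under these assumptions the standard error at N = 1,000 should be more than twice the true effect, so the estimate is mostly noise; at N = 100,000 it should be roughly a quarter of it.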

As you may know, the β’s estimated from a multiple regression may not be unbiased estimates of the “true” causal β’s. In particular, if the error term ε is correlated with any of the X’s, then that will bias your estimates. There are many examples of this:

If you run a GWAS on eating with chopsticks, you will probably estimate that many X’s have a positive effect. They will be the X’s that correlate with having a Chinese background. But obviously those X’s don’t cause you to eat with chopsticks. You got your genes from your parents, and you got your culture from your parents and people around you, and you learnt to eat with chopsticks because of that, and genes cluster geographically, so the X’s are confounded.7

If you run a GWAS on income in the US and use a sample of the whole population, you’d probably find that the SNPs which correlate with African ancestry, including e.g. those that are involved in skin colour, will have a negative effect. This isn’t telling us anything new: black people in the US are poorer on average than white people, and the SNPs pick up on that.

Researchers make great efforts to avoid these unobserved confounds. They do GWAS’s within a population of a single ancestry, which is why many GWAS studies only use white people (and which gets us back to the “white person medicine” problem). They control for broad-scale genetic variation using principal components of genetic data. They often control for environmental factors also. But it is always hard to be sure that they have been successful in any given case.
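A toy version of the chopsticks problem, and of the standard fix, fits in a few lines. Everything is made up: two hypothetical populations differ both in the frequency of one SNP and in how often people eat with chopsticks, and the SNP has no effect at all. A pooled regression still finds one. Adjusting for the population label (a crude stand-in for the principal components researchers actually use) makes it vanish.

```python
import random


def slope(x, y):
    """OLS slope of y on x, with an intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def demean_within(values, groups):
    """Subtract each group's mean; gives the same slope as one dummy per group."""
    totals, counts = {}, {}
    for v, g in zip(values, groups):
        totals[g] = totals.get(g, 0.0) + v
        counts[g] = counts.get(g, 0) + 1
    return [v - totals[g] / counts[g] for v, g in zip(values, groups)]


def simulate(n_per_group, seed=7):
    """Two hypothetical populations. The SNP has NO effect on the habit.

    Population A: allele frequency 0.2, 10% eat with chopsticks.
    Population B: allele frequency 0.6, 90% eat with chopsticks.
    """
    rng = random.Random(seed)
    snp, habit, pop = [], [], []
    for label, freq, p_habit in (("A", 0.2, 0.1), ("B", 0.6, 0.9)):
        for _ in range(n_per_group):
            snp.append((rng.random() < freq) + (rng.random() < freq))
            habit.append(1.0 if rng.random() < p_habit else 0.0)
            pop.append(label)
    return snp, habit, pop


snp, habit, pop = simulate(20_000)
naive = slope(snp, habit)
adjusted = slope(demean_within(snp, pop), demean_within(habit, pop))
print(f"pooled GWAS estimate:          {naive:+.3f}")     # clearly positive
print(f"adjusted for population label: {adjusted:+.3f}")  # close to zero
```

The adjustment only works here because the confounder is fully captured by the label; in real data, ancestry and environment vary in subtler ways, which is why it is hard to be sure the correction has succeeded.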

Luckily, nature has given geneticists a way out of this trap. Among full siblings, genetic differences are random: at each location in the genome, each child inherits one of their mother’s two copies and one of their father’s two copies, each chosen with 50% probability. This makes within-sibling analyses of DNA a true natural experiment. If you run the regression above with sibling-group dummy variables, or after demeaning the variables within each sibling group, or in a few other ways, you can be pretty sure that the resulting β’s capture causality from the SNP to the dependent variable. As a result, there’s a big push now to get DNA from siblings and families. Numbers are still small, but are rapidly growing.
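The sibling trick can be sketched in a toy simulation under made-up assumptions: families differ in an ancestry-linked allele frequency and in an environment that travels with ancestry, and each child receives one randomly chosen allele from each parent. The names and numbers are hypothetical. A regression across everyone mixes the environment into the SNP’s estimate; regressing sibling differences in outcome on sibling differences in genotype cancels everything the siblings share.

```python
import random


def transmit(parent_count, rng):
    """Allele passed to a child by a parent carrying 0, 1 or 2 copies.

    A parent with one copy passes it on with probability 1/2 (Mendel).
    """
    if parent_count == 1:
        return 1 if rng.random() < 0.5 else 0
    return parent_count // 2


def slope(x, y):
    """OLS slope of y on x, with an intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def simulate_sib_pairs(n_families, beta, seed=3):
    """Hypothetical sibling pairs: (genotype, outcome) for two children per family.

    The family environment (+1 or 0) travels with ancestry, and ancestry also
    shifts the allele frequency (0.6 vs 0.2): classic confounding.
    The true causal effect of each allele copy is `beta`.
    """
    rng = random.Random(seed)
    families = []
    for _ in range(n_families):
        freq = rng.choice((0.2, 0.6))
        env = 1.0 if freq == 0.6 else 0.0
        mum = (rng.random() < freq) + (rng.random() < freq)
        dad = (rng.random() < freq) + (rng.random() < freq)
        kids = []
        for _ in range(2):
            g = transmit(mum, rng) + transmit(dad, rng)
            kids.append((g, beta * g + env + rng.gauss(0.0, 1.0)))
        families.append(kids)
    return families


families = simulate_sib_pairs(20_000, beta=0.1)
everyone_g = [g for kids in families for g, _ in kids]
everyone_y = [y for kids in families for _, y in kids]
diff_g = [kids[0][0] - kids[1][0] for kids in families]
diff_y = [kids[0][1] - kids[1][1] for kids in families]
print(f"population regression:     {slope(everyone_g, everyone_y):+.3f}")  # inflated
print(f"sibling-difference design: {slope(diff_g, diff_y):+.3f}")          # near 0.1
```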

Lastly, as with any regression, it’s important to know that genetic effects can vary across subjects and environments.

Suppose that in contemporary America, a particular genetic variant helps to predict, or even to cause, whether a woman attends university. In contemporary Afghanistan, for obvious reasons, the same variant will likely have a much smaller effect.

The same holds true across ethnic groups within a country. There’s no guarantee that causality will be the same among, say, white and black Americans. The same genetic variant can have different effects. This could be for two reasons. First, those different groups are in different environments. Second, they have other genetic variants in different proportions, and there might be interactions between the measured variants and others. So, there can be gene-environment interaction or gene-gene interaction.

Indeed, we know that polygenic scores are less good at prediction when their summary statistics are estimated in one group, and used to predict in a different one. “White person medicine” again!
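The Afghanistan example can be mimicked with a toy simulation. Assume, purely for illustration, that a score’s weights were estimated perfectly in group A, and that in group B the same variants have only 30% of their group-A effects (one stylized reason, standing in for a different environment or genetic background). `score_accuracy` and all the numbers are hypothetical.

```python
import math
import random


def corr(x, y):
    """Pearson correlation."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def score_accuracy(weights, dampening, n=5_000, freq=0.4, seed=0):
    """Correlation between a PGS built with `weights` and the trait in a
    hypothetical group where the true effects are dampening * weights.

    dampening < 1 stands in for an environment (or genetic background)
    in which the same variants matter less.
    """
    rng = random.Random(seed)
    scores, traits = [], []
    for _ in range(n):
        x = [(rng.random() < freq) + (rng.random() < freq) for _ in weights]
        pgs = sum(w * xi for w, xi in zip(weights, x))
        scores.append(pgs)
        traits.append(dampening * pgs + rng.gauss(0.0, 1.0))
    return corr(scores, traits)


rng = random.Random(11)
weights = [rng.gauss(0.0, 0.15) for _ in range(20)]  # assumed estimated without error in group A
print(f"group A (effects as estimated): r = {score_accuracy(weights, 1.0):.2f}")
print(f"group B (effects at 30%):       r = {score_accuracy(weights, 0.3):.2f}")
```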

If you are a social scientist, I’d like you to now perform a small thought experiment. Think for a moment about whether, given the facts above, it will be possible to learn much about any potential genetic contribution to intergroup differences. Remember: estimating effect sizes requires big samples; the independent variable of interest correlates with environments, which may be hard to measure; families provide a natural experiment; and effects may vary across subjects and groups.

Here is an approach that indeed won’t work. You could estimate summary statistics (the β’s above) across a sample of white people. You could then create polygenic scores for people of different ethnic groups using those β’s, and use them to predict the dependent variable, along with dummy variables for each ethnic group. You could see if the differences between the group means shrink after adding in the polygenic score, and you could call this shrinkage the contribution of genetics to the difference. That won’t work because it risks misattributing unmeasured environmental variation to genetics; and misestimating the effect sizes, by using the same effect sizes across ethnic groups.
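Here is a sketch of how that design can mislead, under deliberately extreme made-up assumptions: no SNP has any causal effect; ancestry varies continuously within each group; the trait depends only on an environment that tracks ancestry; and the weights come from an unadjusted GWAS in group A. All names and numbers are hypothetical. Any shrinkage of the gap is then pure confounding, yet the naive analysis would report it as a genetic contribution.

```python
import random


def slope(x, y):
    """OLS slope of y on x, with an intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def mean(values):
    return sum(values) / len(values)


def allele_count(freq, rng):
    """Copies of the allele (0, 1 or 2) drawn at frequency `freq`."""
    return (rng.random() < freq) + (rng.random() < freq)


def simulate(n, ancestry_range, f0, f1, rng):
    """Hypothetical people with ancestry share a ~ Uniform(ancestry_range).

    SNP j's allele frequency moves from f0[j] to f1[j] as a goes from 0 to 1.
    The trait is 3 * a plus noise: an environment that tracks ancestry.
    No SNP has any causal effect.
    """
    genotypes, traits = [], []
    for _ in range(n):
        a = rng.uniform(*ancestry_range)
        genotypes.append([allele_count(p + (q - p) * a, rng) for p, q in zip(f0, f1)])
        traits.append(3.0 * a + rng.gauss(0.0, 1.0))
    return genotypes, traits


def pgs(genotype, weights):
    return sum(w * x for w, x in zip(weights, genotype))


rng = random.Random(2024)
n_snps = 60
f0 = [rng.uniform(0.1, 0.9) for _ in range(n_snps)]
f1 = [rng.uniform(0.1, 0.9) for _ in range(n_snps)]

# 1. Unadjusted "GWAS" in a training sample from group A: one regression per SNP.
train_g, train_y = simulate(6_000, (0.0, 0.5), f0, f1, rng)
weights = [slope([g[j] for g in train_g], train_y) for j in range(n_snps)]

# 2. Score fresh samples from group A and group B with those weights.
a_g, a_y = simulate(3_000, (0.0, 0.5), f0, f1, rng)
b_g, b_y = simulate(3_000, (0.5, 1.0), f0, f1, rng)
a_s = [pgs(g, weights) for g in a_g]
b_s = [pgs(g, weights) for g in b_g]

# 3. Trait ~ group dummy + PGS. With the dummy in the model, the PGS coefficient
#    is the within-group slope, and the dummy's coefficient (the adjusted gap)
#    equals the raw gap minus that slope times the gap in mean PGS.
ma_s, mb_s, ma_y, mb_y = mean(a_s), mean(b_s), mean(a_y), mean(b_y)
coef = slope([s - ma_s for s in a_s] + [s - mb_s for s in b_s],
             [y - ma_y for y in a_y] + [y - mb_y for y in b_y])
raw_gap = mb_y - ma_y
adjusted_gap = raw_gap - coef * (mb_s - ma_s)
print(f"raw gap: {raw_gap:.2f}; gap after adding the PGS: {adjusted_gap:.2f}")
```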

But that is not the only approach. In particular, note that whenever you have family data, you can estimate the causal effect of a polygenic score within that sample, by running a within-family regression. From that, you can draw counterfactual inferences: “what would this sample look like, if it had this counterfactual distribution of polygenic scores?” For example, you can match the polygenic score distribution to that of a different sample (a different group, say) and estimate how that would affect the differences between the groups on the dependent variable. (I wrote about this here.)
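A minimal sketch of the counterfactual step, assuming a linear PGS effect that has already been estimated within families and is the same for everyone. `quantile_match`, `counterfactual_outcomes` and the toy numbers are hypothetical. Each person is given the target group’s score at their own rank, and their outcome is shifted by the within-family effect times the change in score, keeping everything else about them fixed.

```python
def quantile_match(sample_pgs, target_pgs):
    """Give each sample member the target group's PGS at the same rank."""
    n, m = len(sample_pgs), len(target_pgs)
    target_sorted = sorted(target_pgs)
    order = sorted(range(n), key=lambda i: sample_pgs[i])
    matched = [0.0] * n
    for rank, i in enumerate(order):
        quantile = (rank + 0.5) / n
        matched[i] = target_sorted[min(int(quantile * m), m - 1)]
    return matched


def counterfactual_outcomes(outcomes, sample_pgs, target_pgs, beta_within):
    """Outcomes if the sample had the target's PGS distribution.

    Assumes beta_within is a causal, within-family estimate of the PGS effect,
    linear and identical for everyone; each person keeps their non-PGS residual.
    """
    matched = quantile_match(sample_pgs, target_pgs)
    return [y + beta_within * (new - old)
            for y, new, old in zip(outcomes, matched, sample_pgs)]


# Hypothetical toy numbers.
outcomes = [1.0, 2.0, 3.0, 4.0]
sample_pgs = [0.0, 1.0, 2.0, 3.0]
target_pgs = [0.0, 2.0, 2.0, 4.0]
print(counterfactual_outcomes(outcomes, sample_pgs, target_pgs, beta_within=0.5))
# [1.0, 2.5, 3.0, 4.5]
```

With a linear, homogeneous effect, the change in the mean outcome is just beta_within times the difference in mean scores (here 0.5 × 0.5 = 0.25). The rank matching matters only if you also care about the shape of the counterfactual distribution, or want to swap in a non-linear effect.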

Many things can go wrong here! Your sample might not match the population you care about, because not everybody takes part in medical questionnaires or wants their DNA tested, or because you simply don’t have data for that target population. You might not notice that effect sizes vary within the sample. Also, you probably don’t know all of a person’s DNA variants; maybe the ones you measure explain some intergroup differences, but unmeasured DNA variants might go the opposite way.

But these are all just normal scientific problems. The ability to generalize beyond your sample is called “external validity”. It’s a worry for almost every natural or policy experiment. Any statistical regression ever might mask some heterogeneity; it’s usually fine, because we care about average effect sizes. We don’t usually have perfectly measured data on any independent variable. And so on.

The report argues “Although within-group comparisons might always be confounded, the magnitude of the confounding will be vastly greater… in between-group comparisons.” This argument seems to confuse within-group estimation with within-group comparisons. You do within-group estimation — say, a within-family GWAS among one ethnic group — to cleanly estimate causal effects within that group. Those estimates now allow you to entertain counterfactuals: you can ask questions like “what if these guys had a different distribution of these genetic variants?” — for example, the distribution of this other group.

In fact, it is common practice to use within-group estimates to illuminate between-group differences. Take that workhorse of applied economics, the diff-in-diff regression:

$$Y_{it} = \alpha_t + \gamma_i + \beta X_{it} + \varepsilon_{it}$$

where X is a treatment that gets applied at a certain time, and α and γ are time- and unit-level dummy variables. β is the estimate of the effect of X on Y, and it is estimated solely from variation within units. By doing this we control for any differences between units which remain constant across time. Nevertheless, one thing we hope to learn from the exercise is about differences between units! Comparing a bunch of units with and without the treatment, we estimate that β of the difference between them is caused by having the treatment.
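As a hedged illustration of within-unit estimation, here is a minimal simulated two-period diff-in-diff in Python. All numbers are invented: treated units start 3 points lower (a fixed unit effect γ), everyone gets a common time shock (α), and the true treatment effect β is 2. In a balanced two-period panel like this one, the OLS estimate of β from a two-way fixed-effects regression coincides with the simple difference of differences computed here.

```python
import random


def simulate_panel(n_units=10_000, beta=2.0, seed=3):
    """Two-period panel: Y_it = alpha_t + gamma_i + beta * X_it + noise."""
    rng = random.Random(seed)
    rows = []  # (treated_group, period, y)
    for unit in range(n_units):
        treated = unit % 2 == 0
        gamma_i = (-3.0 if treated else 0.0) + rng.gauss(0, 1)  # fixed unit effect
        for period in (0, 1):
            alpha_t = 1.5 * period  # common time shock
            x = 1 if (treated and period == 1) else 0  # treatment switches on in period 1
            y = alpha_t + gamma_i + beta * x + rng.gauss(0, 1)
            rows.append((treated, period, y))
    return rows


def cell_mean(rows, treated, period):
    ys = [y for tr, p, y in rows if tr == treated and p == period]
    return sum(ys) / len(ys)


rows = simulate_panel()
# Naive comparison across units in period 1: mixes beta with fixed unit differences.
naive = cell_mean(rows, True, 1) - cell_mean(rows, False, 1)
# Difference in differences: equals the average within-unit change, treated minus control.
did = (cell_mean(rows, True, 1) - cell_mean(rows, True, 0)) - (
    cell_mean(rows, False, 1) - cell_mean(rows, False, 0)
)
print(f"naive gap {naive:.2f}, diff-in-diff estimate {did:.2f}")
```

The naive cross-sectional comparison comes out near -1, because it mixes the treatment effect with fixed unit differences; the difference of differences comes out near the true 2, and still tells us how much of the between-unit gap the treatment accounts for.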

We could steelman the report’s argument and say that it isn’t against research designs like the above, that aim to illuminate group differences, using within-group-estimated causal effects; it’s only against invalid research designs, which directly compare ethnic groups and draw inferences from that. If so, fine! We can all agree not to do bad research. But the report’s language is much broader — it talks about “research that attempts to compare people of many different genetic ancestries”, “predicting phenotypic differences across genetic ancestral groups” (S32), and in general “research on sensitive phenotypes that compares two or more groups” (S31). If the report authors think they were only targeting the narrower set of clearly invalid research designs, I hope they will say so publicly.

Are the scientific problems worse for genetics than for any other variable? I don’t see that. Geneticists have extraordinarily good luck: they have a potential natural experiment within every family. The rest of social science has to hunt down weird settings where independent variables are randomly allocated — elections that are won by just a few votes; the part of economic growth that correlates with rainfall; a program putting women into power in certain randomly chosen local governments; small-N randomized trials. We don’t shut down this work before it happens because it might have statistical problems. We do the work, then acknowledge the problems, or let others point them out.

There is one other argument which might be called the Turkheimer critique. Eric Turkheimer, a distinguished geneticist, is sceptical of polygenic scores: he doesn’t think they will teach us very much that is useful. (There are traces of the arguments around this position in the Hastings report, which he coauthored.) Partly that is because current polygenic scores don’t capture much of the causal effects of genetics: a bare 5% of variation in educational attainment, for example. Partly it is because simply having a number which predicts something about you is very uninformative about how the chain of causality works, starting with DNA molecules and ending up with you being tall, or receiving a university degree, or whatever. (As he entertainingly puts it, polygenic scores come from “blind-pig GWAS”.)

I sympathise with these points, but they are both vulnerable to the critique that they may not stay true in future. From twin studies, we think that ultimately genetics should explain (causally!) about 40% of variation in educational attainment, i.e., a lot. (Even that 5%, while it’s small in total, is pretty damn big compared to many environmental effects that we can estimate with confidence.) And behind one single polygenic score lie the millions of numbers that make up the summary statistics, one per genetic variant. Causality here is like a huge river flowing from millions of tiny sources which eventually merge in the sea. One way we might learn about it is by figuring out which of the tiny sources matter more. That is what the summary statistics can tell us.
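To make the “millions of tiny sources” picture concrete, here is a minimal Python sketch under loudly stated assumptions: the three variants (`rs_A`, `rs_B`, `rs_C`) and their betas and frequencies are invented for illustration, the score is the usual weighted sum of effect-allele counts, and each variant’s share of score variance uses the textbook formula 2p(1 - p)β², which assumes Hardy-Weinberg equilibrium and no linkage disequilibrium between variants. Real summary statistics have millions of rows and correlated variants.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    name: str    # hypothetical variant ID
    beta: float  # per-allele effect, as in summary statistics
    freq: float  # effect-allele frequency


def polygenic_score(genotype, variants):
    """Weighted sum of effect-allele counts (0, 1 or 2) using summary-statistic betas."""
    return sum(v.beta * genotype[v.name] for v in variants)


def variance_contribution(v):
    """Score variance from one variant, assuming Hardy-Weinberg equilibrium
    and no correlation (linkage disequilibrium) with other variants."""
    return 2 * v.freq * (1 - v.freq) * v.beta ** 2


variants = [
    Variant("rs_A", 0.020, 0.50),
    Variant("rs_B", 0.050, 0.05),
    Variant("rs_C", -0.010, 0.30),
]
person = {"rs_A": 2, "rs_B": 0, "rs_C": 1}  # hypothetical allele counts

score = polygenic_score(person, variants)
ranked = sorted(variants, key=variance_contribution, reverse=True)
print(f"score = {score:.3f}")
for v in ranked:
    print(v.name, f"{variance_contribution(v):.6f}")
```

The ranking shows why the per-variant numbers carry more information than the single score: a rare variant with a large beta (`rs_B`) can matter more than a common one with a small beta, and it contributes nothing to this particular person’s score.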

Turkheimer might respond that these are arguments based on hope. Sure, but all scientific research is based on hope! We give uncertain hypotheses imperfect tests, in the service of incomplete research programs. That is not a reason to give up before you start.

Maybe in the end polygenic scores won’t tell us anything. But here are two alternative scenarios.

The first scenario is that we do the research, and compute the causal contribution of (a given set of) genetic variants to difference X: it’s a precisely estimated ten per cent. Would that be so bad? It would give policy-makers assurance that 90% of the differences are environmental, and perhaps can be addressed. And it might be better in terms of stigma than a situation where people don’t know what the genetic contribution is, and simply follow their own guesses. (Still more so, if they think that geneticists have deliberately decided not to find out.)

The second scenario is admittedly extreme. We do the research on some big, morally important difference between groups (pick whichever you prefer). We come to understand the causality a bit better: that long journey from DNA to social outcomes, via increasingly complex environments. And we discover that the difference can be fixed by, oh, I don’t know, a daily glass of orange juice. In that case, a scientific community that had avoided or held back this research for years or decades would not look very wise, would it?

Yes, I understand that biology and society are complex, and that silver bullets will surely not be found in Tetrapak cartons. But the point survives. The complexity of the processes behind unequal outcomes is a reason to try to understand them using all the tools at our disposal. That is what researchers are paid for.

Audience participation

I haven’t said much about the report’s recommendations on how to conduct genetic research, because they are mostly sensible. There is just one small issue, which is the recommendation to engage with members of communities under study. In particular, the report says:

researchers should… be open to the possibility that community members recommend that the study not be conducted at all, and… researchers should explicitly solicit their views about this question.

Talking to the people you are studying is often a good idea scientifically. Giving them a veto over your research is not. Consider one group which the report’s authors tried valiantly to reach: conservatives. There’s a thriving literature trying to understand conservative psychology, often in terms of concepts like resistance to change, prejudice and fears about penis size. OK, I made the last one up, but you get the idea. I find this literature pretty daft, and think of it as essentially selling cope to smug liberal elites. It’s entirely possible that it would be improved if its authors talked to a few conservatives (if they could find them). But it’s fine for the literature to exist, it doesn’t matter if some conservatives are offended, and conservatives do not need to approve it. In general, social science may entail treading on toes. (Picture Marx engaging the Lumpenproletariat community: “passive decaying matter of the lowest layers of the old society…in accordance with its whole way of life, it is more likely to sell out to reactionary intrigues”!)

Know your role

You might be both a wrestling referee, and a true fan of The Rock. Your home may be full of Rock merchandise. You may know his favourite put-downs by heart. But when you step into the ring as a referee, you put that to one side. You have to be a fair umpire of the match.

The vocation of science is similar. When you do science, you don’t drop your own views about how to organize society. But you hold yourself to the standards of the profession, including scientific honesty and a commitment to follow the evidence. I’ve written about how utilitarianism can corrupt liberal institutions, because utilitarians subordinate all their actions to a single overarching goal. Academia today is specifically at risk from this.

If almost everyone in academia shares a specific world view, then they may subordinate the practice of science to the furtherance of that world view. They may also put on collective blinkers, coming to believe that any threat to that world view is catastrophic, and must be avoided at any cost, including the cost of scientific rigour and fairness.

Alternatively, if many people in academia share a narrow world view, and if others in academia lack the courage to speak up — perhaps fearing for their grant money or position, or simply eager for the approval of their peers — then we may end up in a system of pluralistic ignorance, in which a minority dictates what “everybody thinks” and “everyone agrees”, even though it just ain’t so.

In case you think that is paranoid, after I wrote my last piece on this topic, a reasonably well-known geneticist wrote to me telling me that they disagreed, and that they wanted to cease all research collaboration with me. I wrote back, mildly pointing out that I felt my views were distinguishable from those of Hitler in Mein Kampf, and asking them the reasons for their disagreement. I never got a very clear answer. I found this episode more silly than scary. But I’m an independent researcher with enough money to live on. It is hard to believe that PhD students in this person’s group do not feel some pressure to self-censor.

The cure for these evils is for academics to clearly understand the specific norms of the vocation of science. No matter how much you like The Rock, when you wear the black and white striped shirt, you must act as an impartial referee. No matter what your political views, when you call yourself a scientist, you must pursue the truth, whether or not it is unpalatable, and hold yourself to impartial standards of evidence. None of us is perfect at this. All of us owe it to ourselves and to the institution of science to preserve it as a norm.

To take this view requires trust in the other institutions of society. You must believe that politicians will not twist your science, that the media is capable of reporting on it fairly, and that with the help of these other players, citizens can be intelligent consumers of information, not manipulable fools. This trust is in low supply in Western democracies in 2023. If you don’t have that trust, then the risk is that you subordinate everything to party preference. All that matters, in any role, becomes the implementation of the political program which — you sincerely believe — overrides every other consideration.

One possible eventual outcome is that science loses its trust as an impartial referee. The half of the population which disagrees with scientists’ politics comes to mistrust their results. They assume that they are being lied to. Twenty-first century social science becomes like nineteenth-century political economy, a set of ideas which were used to “educate” the politically dangerous working classes, and which Karl Marx would write off with wholesale contempt as “ideology”.

This is not a hypothetical possibility. One tweet in response to the announcement of the Hastings Report was just two words: “They know”. You can bet this tweet was made by a white supremacist.

To be clear, that mistrust would be at least partially deserved, and as a result, it is not the worst possible outcome. A worse outcome would be that science retains the public trust, even while scientists deliberately bias science to further their ideological goals. Whether those goals are good or bad, this would mean Western liberal democracies giving up the bet that they have taken for up to five hundred years: the bet that knowledge is worth pursuing. We would have collectively chosen to base our social order upon ignorance.

Cage fight

Overall, the Hastings document reads like a cage fight between two rival reports.

One report stands up for the intrinsic value of science, that is, of systematic knowledge about our world. It makes solid points about the potential benefits of genetic research, and has good ideas about how to pursue and present it sensitively. It quotes — but hidden in a footnote — the fine legal judgment:

“By depriving itself of academic institutions that pursue truth over any other concern, a society risks falling into the abyss of ignorance. Humans are not smart enough to have ideas that lie beyond challenge and debate.” (fn. 131)

This report is a Rock. The other report is a jabroni.

For those whose scientific training did not include exposure to WWE, the root definition of the term “jabroni”, popularized by Dwayne “The Rock” Johnson, is a wrestler whose job is to lose to established talent. That seems like a fair description for a geneticist who is afraid of letting genetics do any explanatory work.

The jabroni report has a one-sided and empirically unsupported evaluation of the risks of genetic research. It underestimates the capability of genetic research to deal with group differences, effectively giving up in advance. It muddies the distinction between dangers to one particular political view, and dangers to society. And it risks creating a situation, or perhaps worsening an already existing situation, in which funders and editors use political criteria to decide what gets funded and published, and in which scientists self-censor out of fear.

Researchers in the field must support the Rock against the jabroni. We owe it to the public, which trusts us to find the true mechanisms behind desperately important social problems. We owe it to the actual victims of those problems, many of whom have far more urgent concerns on their plate than worries about what people are saying in academic conference rooms.

Ark or MacGuffin?

Let’s step back a little to think more generally about how geneticists handle these issues. And let’s switch metaphors: in Raiders of the Lost Ark, the Ark of the Covenant is an icon of dangerous magical powers, which is eagerly sought by the Nazis to make them invincible. The Ark must never be opened.

Geneticists seem to treat genetic variation as a similar source of deadly power, which they must carry around unopened, in case it falls into the wrong hands.

I have a suggestion. We should stop treating genetic differences like a mysterious, dangerous Ark, and start treating them like what they are: a MacGuffin.

A MacGuffin is a film device — an object, a suitcase or whatever, which motivates the plot and all the characters but which is actually unimportant in itself.

I call genetics a MacGuffin not because they can’t predict or cause individual differences (they can), nor because they can’t predict or even cause group-level differences (mostly, we don’t yet know). They are a MacGuffin because they are not fundamentally in a separate category from any other cause of individual or group-level differences. Let’s go through some of the putative reasons why people think otherwise.

“You can change the environment, but genetics are fixed at conception and unalterable.”

It’s true that DNA is fixed at conception, and we can’t change it (yet). But we can do plenty of things to change its effect. (Famously, myopia is heritable, but we can wear glasses.) Also, lots of things are fixed early and unalterably:

Your number of elder siblings is fixed at birth. Elder siblings reduce the time and effort parents can spend on you. Effects are large: in one paper, second siblings lose 3 months’ education compared to first siblings. Our working paper shows similarly large effects. Social scientists cheerfully publish these estimates, ignoring the concern that younger siblings like me may be stigmatized by our elder brothers.

Birth weight and conditions in the womb. Ditto.

Culture is picked up early in life from parents and peers, varies across ethnic groups, and is notoriously hard to change.

Investments in very early education have the largest returns, according to James Heckman. These are under political control, but for any adult individual, it is by definition too late.

“Genetics is natural, so ascribing people’s characteristics to genetics risks treating them as natural.”

First, see above about how simple interventions can change outcomes even if their causes are natural. Second, the argument rests on the premise that genetics is “natural”, and that premise is wrong.

Sure, genetics are biological in the sense that DNA works by creating proteins and altering human biology. But (a) as the Hastings report points out, these effects are very often subsequently mediated by the social environment. If DNA affects your skin colour, that’s biological. If people then discriminate against you based on your skin colour, that’s social.

Also (b) genetics, specifically the distribution of genetic variants in a society, are not unaffected by social and historical processes. People move in response to economic conditions, and they bring their DNA with them into different areas. (There’s a paper about that.) Some people have more children than others, and this affects both the means of polygenic scores and how they covary with the social environment. (There’s a paper about that.) People marry and/or have children with other people, and their children get their parents’ DNA: this takes place in a society’s marriage institutions, not in a petri dish. Social advantages, like being a first-born child, can even affect whom you marry, and thus your children’s genes. (Oh hey! There’s a paper about that![8])

The solution to people treating genetics as “natural” is to point out that it isn’t. The way out is through the door. Why will no one use this method?

“If genetic differences between groups are real, this will justify racism.”

Here we are getting at the heart of the matter. I think this argument is what motivates the report’s statement that “the costs of a result that concerns existing stereotypes against already-minoritized groups would be vast” (S35).

I will give this argument half a point out of two. Where it doesn’t get a point is in the idea that existing patterns of socio-economic inequality will be substantially changed if people think that individual outcomes are partly under genetic control. This argument seems to imagine something like the following pair of scenarios.

Scenario 1

PROSPECTIVE BOSS: And now, Bob, tell me about your educational qualifications.

BOB (a member of a minority): Well, sir, I have D’s in GCSE Maths and English.[9]

BOSS: Hmm. That’s not very good for this role... Tell me, were your problems with education caused by the environment, or were they genetic?

BOB (crestfallen): I’m afraid they’re partly genetic.

BOSS (shaking his head): Then there’s no place for you here.

Scenario 2

As before, until:

BOSS: ... Tell me, were your problems with education caused by the environment, or were they genetic?

BOB (brightening): Oh, completely environmental! My school had huge class sizes, and I grew up in a one-bedroom flat, not speaking English, with an abusive alcoholic step-parent.

BOSS (genially shaking his hand): Why then, we can fix that up in a jiffy! Welcome aboard!

I hope it is obvious that this isn’t how things work, and why not. Most social actors evaluate and/or reward people based on their actual characteristics, and treat their root causes as beyond their remit. Employers hire people for their skills, exam boards grade pupils based on their performance, courts make the punishment fit the crime. None of them concern themselves with how these things came about, and that will remain broadly true.

Here is where this argument gets half a point: it’s possible that, if people believe group differences are genetic, they will start to listen to racists, and will lose faith in social programs which are designed to equalize outcomes by changing the social environment.

But it still only gets half a point, for three reasons. First, if you define racism as a belief that genetics contributes to group differences, then you have put your definition in hock to a testable assertion. If it later turns out that maybe genetics do contribute to group differences, and people parse this as “oh, the racists were right all along!”, then you will have painted yourself into a very nasty corner. If you think that might happen, wouldn’t it be a good idea to stop painting sooner, rather than later?

Second, no results from genetics will overturn the existing, rather solid research on discrimination from e.g. job application experiments. It’s even possible that genetic differences could exacerbate these effects and/or vice versa.

Lastly, suppose that genetics do contribute to intergroup differences, say in schizophrenia diagnosis, or death in childbirth, or educational attainment. And suppose you mistakenly believe that they don’t. So you see differences, and you try to solve this by equalizing environments. Unless there are very strong gene-environment interactions, this will not completely work. Even if you equalize environments completely, some differences will remain.
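The argument can be put as toy arithmetic. The sketch below is purely illustrative: it assumes an additive model with no gene-environment interaction, and the gap sizes and the “halve the environmental gap each round” policy are invented numbers, not estimates of anything.

```python
def outcome_gap(genetic_gap, env_gap):
    # Additive model with no gene-environment interaction (an assumption).
    return genetic_gap + env_gap


genetic_gap = 0.2  # hypothetical genetic contribution to the group gap
env_gap = 1.0      # hypothetical environmental contribution
history = []
for policy_round in range(10):
    history.append(outcome_gap(genetic_gap, env_gap))
    env_gap *= 0.5  # each round of policy halves the environmental gap

print([round(g, 3) for g in history])
print("gap with fully equal environments:", outcome_gap(genetic_gap, 0.0))
```

Each round narrows the outcome gap, but it converges to the assumed genetic gap of 0.2 rather than to zero.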

You are now in a feedback loop. Your initial attempts to make environments equal have not made outcomes equal. So you assume you have not tried hard enough. You do more to equalize environments. But outcomes still don’t equalize. You take more extreme measures. And so on. At some point, you are going to generate political pushback, and that pushback is not guaranteed to come as a mild liberal protest. It may also wear a MAGA hat. Or worse.

The counter-argument is that if people believe genetics contribute to intergroup differences, then that will justify every kind of inequality, and we will return to the days of open discrimination.

That argument is interesting, because we already have one form of intergroup difference where we acknowledge genetics matters. That is society’s basic faultline: the difference between rich and poor. Numerous papers point out the heritability of income and wealth. Others use polygenic scores to predict income. Academics differ about the relative contributions of genetics and environments, but I know of no mainstream work arguing that genes do nothing, and you won’t get cancelled for saying that genetics matter.

This evidence has not done much to discredit redistribution, and I doubt it will do so in future. After all, as Paige Harden has pointed out, a reasonable response is that genetic disadvantage should be compensated for. While strictly genetic arguments are relatively recent, the idea that there are differences in talent, and that we need to support “strivers” who are not highly gifted but who work hard, has long been common in political arguments about the welfare state.

The case of income and wealth suggests that acknowledging genetic difference does not destroy the case for redistribution or equality. It might simply make the debate more levelheaded, since arguing for equality will no longer require accusing individuals or institutions of discrimination.

To put it another way, the “vast costs” of a non-null result in this area are really no different from the point the report made:

… sometimes phenotypic relationships are confounded by genes…. Ignoring the latter possibility risks getting the wrong empirical answer. (Box 5, S26)

It is weird to argue simultaneously that this is useful, and that it would be a catastrophe.

IT DOESN’T MATTER WHAT YOUR INDEPENDENT VARIABLE IS

To make the MacGuffin approach more concrete, here is a simple, specific proposal for how we should evaluate genetic research in future:

Principle of symmetry: research into genetic causes of inter-group or inter-individual differences should be treated the same way as research into any other causes.

The symmetry principle has obvious advantages. It is easy to understand. It is also relatively easy to apply. For any proposed research, ask: “how would we evaluate a research design like this, if it were not investigating genetics but something else?” Lastly, it is fair: it does not bias the scientific process in favour of any class of explanations.

Here’s an example: a research proposal plans to explore differences in age at first birth between two ethnic groups. The research proposal should be evaluated in the same way, whether the independent variable of interest is genetic differences, cultural differences, or something else.

Of course, there are issues specific to genetics, like “What DNA array chip was used to capture common genetic variants, and did the process proceed reliably?” or “How will you control for population stratification which might confound your results?” But at a high enough level of abstraction, these issues typically have analogues in other forms of research — like, say, “what survey instrument was used to measure subjects’ attitudes, and did the interviews proceed reliably?” or “how will you control for cross-country differences which might confound your results?” It should be possible to gauge the seriousness of these issues on metrics which are independent of the particular form of research. Indeed, funding bodies are used to evaluating competing proposals with widely differing research designs and topics. They do not need a special yardstick for genetics.

The principle does not propose that genetic explanations should always be given “equal rights”. If you want to use the genetics of beat synchronization to explain the making of John Coltrane’s A Love Supreme, then you are wasting your time. But a priori implausible explanations should be rejected because they are implausible, not because they are genetic.

If you put high weight on the risk of stigmatizing minorities, you can still apply the principle of symmetry. Just evaluate all research on these grounds. For example, if somebody plans to develop an atlas of cultural variation, or compare second generation immigrants to the US, you can ask ethics committees to consider the risks of identifying cultural traits (say, work ethics or discount rates) in a stigmatizing way. I am suspicious of these arguments, and hope they will receive pushback from social scientists. But let’s apply them equally.

A new challenger has entered

I’ll finish with some broader points.

As the careful reader will have spotted, I have made no claims about whether, or how much, genetics contributes to any intergroup difference. This is because I don’t know, and I do not want to speculate irresponsibly. My argument is just that we should try to find out.

It’s easy and natural for researchers to start to root for “their” hypothesis. I’m equally fine if genetics matter a lot to any given differences between individuals or groups, or if they don’t.

No matter how traumatic their social effects, discoveries in genetics can never vitiate the fundamental moral equality and dignity of human beings. Also, the best way to judge people is as individuals. One reason I am not frightened of opening the genetic MacGuffin is my confident belief in these simple liberal principles.

My views are my own, not those of my co-authors or colleagues.

Writing this may make me enemies, but I worry more about the friends. It is the job of every political side to police its own extremists and nutcases. Experience teaches that I can write something I think is impeccably fair and decent, and find myself being cited/retweeted by people with biographies like “father, baseball fan, loves pizza and white nationalism”. So, be aware: if your Twitter feed is full of race-baiting; if, after a deranged white fanatic murders several black strangers in cold blood, your reaction, before the bodies are buried, is some miserable piece of whataboutery; if you can’t write an article without a snide dig at “urban thugs” or whatever, as a way to be nasty about black Americans without having the guts to do it openly; then you are no friend or ally of mine, and you need to take a long, hard look in the mirror.

One last thought. Is this whole debate fighting the last war? It seems very unlikely that in the democratic West, we will return to the early twentieth century version of bureaucratic, state-based eugenics, with its intellectual ties to progressivism and fascism. But we are already, right now, experiencing the development of a decentralized, choice-based, neoliberal version. You can have your embryos screened for polygenic scores and pick which one to implant. A recent post on the LessWrong forum explains how to have polygenically screened children:

That may sound amazing. It may sound like science fiction. It may even sound horribly dystopian. But whatever your feelings, it is in fact possible. And these benefits are available right now for a price that, while expensive, is within reach for most middle-class families.

Companies like Genomic Prediction offer this service. There is apparently plenty of demand.

I don’t know what to think about this. Intuitively, I find it gross: it smacks of a dislike for humans as they actually are. But some advocate might say “no, we’re going to make everyone smarter and healthier, why is this a bad thing?” In any case, I would expect that academics who were deeply concerned about genetic ethics would be speaking out loudly on this topic, for or against. So far, I have not seen much sign of that.

If you enjoy this newsletter, you might like my book Wyclif’s Dust: Western Cultures from the Printing Press to the Present. It’s available from Amazon, and you can read more about it here.


The report seems to support this interpretation on S33: “Because it is a foundational principle of human subjects research ethics that studies should be conducted only when they are validly designed to answer a meaningful question, when this criterion is not met, there is no need for additional scientific and ethical analysis.” In other words, the (only) reason not to do between-group comparisons is that they are bad science.

Some of the working group have further criteria, i.e. they believe even acknowledgedly valid science ought to be vetoed. I mostly won’t address that argument, because I kind of think those people are beyond persuasion, at least for now.

R-squared measures how much of the variation in a phenomenon you are able to explain.
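In symbols, for outcomes y_i with mean ȳ and model predictions ŷ_i, the standard definition is:

$$R^2 = 1 - \frac{\sum_i (y_i - \hat{y}_i)^2}{\sum_i (y_i - \bar{y})^2}$$

So an R² of 0.05 means the predictions account for 5% of the variance in the outcome.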

In point of fact, according to this paper, black people do have relatively more twins than whites, but they have relatively fewer triplets and higher-order births. The causes of multiple births are partly sociological: they are more likely with IVF, and rates increased among older women from the 1980s onwards.

Corneille et al. (2023) describe the phenomenon of the “selective appeal for rigor”, which they describe as “to demand stronger evidence from a researcher with a competing perspective than the author demands from themself”.

I dunno, maybe this is wrong! Maybe someone will do a within-family GWAS on using chopsticks and find that some SNPs really are causal. That would be very funny and absurd, but it is also very unlikely.

I mean, there are lots of papers about all these topics, but I am bigging up our research agenda here.

In America: a GED.
