I had an interesting conversation yesterday with social scientists in different disciplines. Some were qualitative researchers, others were quantitative, and as usual it was quite hard to speak across those lines.

Qualitative guys sometimes talk about scientism, meaning something like “an ideology which pretends to be scientific, or uses the status of science to make illegitimate claims”. I’m going to try to give an account of scientism, from the perspective of a quantitative researcher. Doing this might help quantitative people think about the limits of what they do, while qualitative researchers might find it expresses some of their concerns. I don’t claim anything here is deeply original! It just represents how I think about the issues. I’ll also suggest a possible solution to what

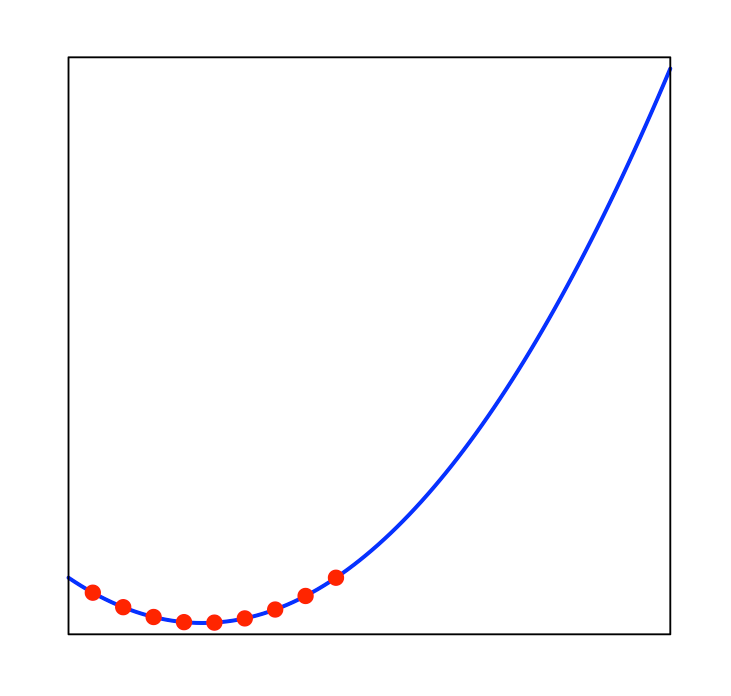

Here’s a simple, abstract picture of some social process. Maybe it’s the change in GDP of a country, or the number of pizza delivery drivers in an area. Maybe the x axis is some other interesting variable — the number of doctors in the country, say — or maybe it’s just time or space.

This is a nice, straightforward process. It’s a quadratic curve. Maybe you measure a few points on this curve (say, you count the number of pizza drivers over a few months). You’ll quickly see the curve:

And you can extend that curve, and you’ll be right! This is great! You can predict the future!

Of course, no real science works like this. There’s always randomness and noise, even for physicists, let alone for something as complex as society. Here’s a more realistic picture of the data.

That looks much messier. But in a sense, the mess is an illusion. I created the data above by just adding random noise to the original, smooth curve. You can see that if I plot the original curve over it.

A social scientist who measured enough data points could approximate the original curve, not perfectly, but pretty well, and they’d never be too wrong about predicting other data points.1 Here’s an example: if you measure 25 of the first 50 data points and draw a curve through them (red dashes), you get close to the true underlying curve (in grey).

The above is roughly what most social scientists claim to be doing. Why does it work, in our toy world? Well, the underlying process — the smooth curve — is the same everywhere. It’s governed by the same simple equation. If you look at one part of the curve, you can use it to predict the rest. Sure, there’s noise, but we can use statistics to iron the noise out.
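If you want to play with this toy world yourself, here is a minimal sketch in plain Python. The coefficients of the curve, the noise level and the function names are all my own assumptions for illustration, not the settings behind the graphs above. It adds noise to a quadratic, samples 25 of the first 50 points, fits a quadratic by least squares, and reports how far the fit is from the true curve on all the points that were not sampled.

```python
import random


def true_curve(x):
    # Assumed quadratic; the post does not specify its coefficients.
    return 10.0 * x**2 - 4.0 * x + 1.0


def solve_linear(a, b):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    coef = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * coef[c] for c in range(r + 1, n))
        coef[r] = (m[r][n] - s) / m[r][r]
    return coef


def fit_quadratic(xs, ys):
    """Least-squares fit of y = c0 + c1*x + c2*x^2 via the normal equations."""
    powers = [sum(x**k for x in xs) for k in range(5)]
    a = [[powers[i + j] for j in range(3)] for i in range(3)]
    b = [sum(y * x**i for x, y in zip(xs, ys)) for i in range(3)]
    return solve_linear(a, b)


def predict(coef, x):
    return coef[0] + coef[1] * x + coef[2] * x**2


def run_experiment(seed=0, noise_sd=0.1):
    """Fit on 25 of the first 50 noisy points; score against the true curve elsewhere."""
    rng = random.Random(seed)
    xs = [i / 100 for i in range(100)]
    ys = [true_curve(x) + rng.gauss(0.0, noise_sd) for x in xs]
    sample = set(rng.sample(range(50), 25))
    coef = fit_quadratic([xs[i] for i in sorted(sample)],
                         [ys[i] for i in sorted(sample)])
    held_out = [i for i in range(100) if i not in sample]
    sq_err = [(predict(coef, xs[i]) - true_curve(xs[i])) ** 2 for i in held_out]
    rmse = (sum(sq_err) / len(sq_err)) ** 0.5
    return coef, rmse


if __name__ == "__main__":
    coef, rmse = run_experiment()
    print("fitted coefficients (c0, c1, c2):", coef)
    print("RMSE against the true curve on unsampled points:", rmse)
```

Under these assumed settings the error on unsampled points should usually be small compared to the range of the curve, though it grows as you move further from the sampled half and depends on the noise level you pick.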

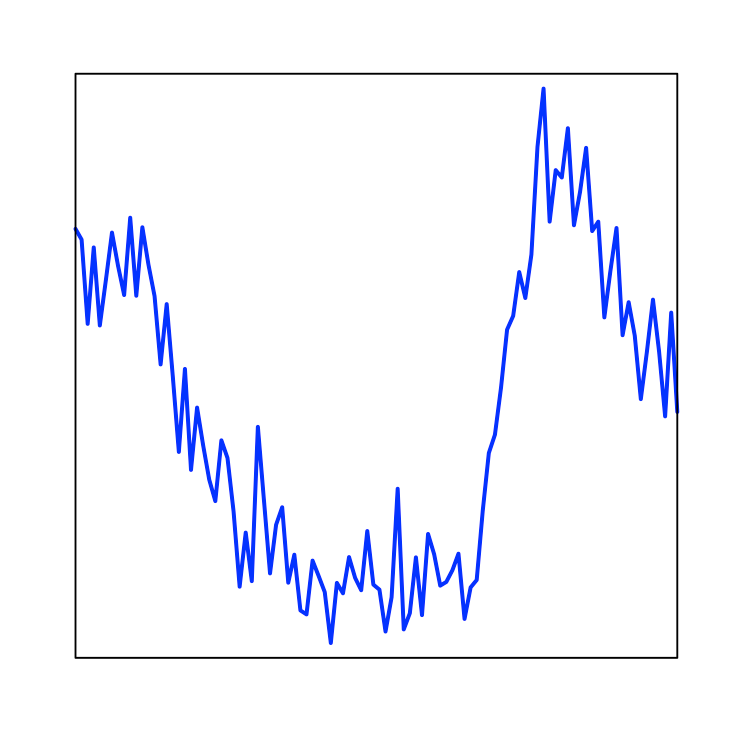

Now here’s another abstract picture of a real-world process.

This underlying process is messier. It is not a beautiful quadratic curve, it’s just a set of lines connecting some random values. If we add some noise to it, it gets messier still.

This may look quite like the first graph with noise (it’s a wiggly line!). But data like this poses much more basic problems to the scientist. Here’s what can happen if you measure a bunch of these points and draw a line through them.

I’ve assumed here that the scientist measured enough data to get the line exactly right in the times and places where he measured it. This is a best-case scenario! The orange line is exactly the same as the underlying process, i.e. the flat line in the previous graph. But as soon as you move outside that local area, the line rapidly becomes way off, and very misleading as a predictor.

That’s not surprising. The underlying process is not the same everywhere! The slope of the line where you estimated it can tell you nothing about the slope anywhere else on the graph. While we are in the orange part of the line, we are accurate because we have got the right model of the world for that time and place. But move to the left of the orange line segment. As we do so, for a little while our predictions stay accurate, simply because the line is continuous and doesn’t change too fast. We may think our model is still working. But we are kidding ourselves; we are accurate just “by chance”. Soon the data will be far off our predictions. Now, look to the right. There, our model fails suddenly and catastrophically. This is more painful, although at least we learn fast that we were wrong.
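The same point can be sketched in a few lines of plain Python. Everything here is an assumption for illustration: the process is a set of random values at integer positions joined by straight lines, and the scientist is given the best case of recovering one segment's slope and intercept exactly, with no noise. The function names are hypothetical. The sketch then measures how wrong that local line is at each position along the whole process.

```python
import random


def make_process(n_knots=11, seed=0):
    """Random values at x = 0, 1, ..., n_knots - 1, to be joined by straight lines."""
    rng = random.Random(seed)
    return [rng.uniform(0.0, 10.0) for _ in range(n_knots)]


def process_value(knots, x):
    """Value of the piecewise-linear process at x (linear interpolation)."""
    i = min(int(x), len(knots) - 2)
    frac = x - i
    return knots[i] + frac * (knots[i + 1] - knots[i])


def local_line(knots, segment):
    """Best case: the exact slope and intercept of one segment of the process."""
    slope = knots[segment + 1] - knots[segment]
    intercept = knots[segment] - slope * segment
    return slope, intercept


def extrapolation_errors(knots, segment, step=0.5):
    """Absolute error of the local line at each x across the whole process."""
    slope, intercept = local_line(knots, segment)
    n_steps = int((len(knots) - 1) / step)
    errors = []
    for k in range(n_steps + 1):
        x = k * step
        predicted = slope * x + intercept
        errors.append((x, abs(predicted - process_value(knots, x))))
    return errors


if __name__ == "__main__":
    knots = make_process()
    for x, err in extrapolation_errors(knots, segment=5):
        print(f"x = {x:4.1f}   error of local model = {err:6.2f}")
```

Inside the chosen segment the error is zero by construction. Outside it, nothing ties the local slope to the rest of the process, so the error depends entirely on what the random values happen to do.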

Scientism

This is my basic mental model of scientism: people finding locally accurate generalizations, and talking as if they have discovered deep scientific laws.

It’s worth contrasting with other kinds of scientific pathology. There are stories about p-hacking, publication bias, and researcher degrees of freedom. There are models where the world is mostly random noise, 5% of research teams get a significant result at p < 0.05 and publish it, and so most scientific research is false. None of that applies above. The researchers who look at their local time and place get enough data and build an accurate model. In some sense they are doing good science. And it works! It’s predictive, until it isn’t. Those other pathologies are real, but they are different from scientism, and even fixing them would not protect us from scientism.

Here’s a basic intuition for why I think scientism is widespread: if you read the abstracts of social science articles, there are a lot of common nouns flying about. For example, I once attended a talk about unrecognized states, i.e. places like South Ossetia and Abkhazia. Many are post-Soviet and backed by Russia; also, the entire list of unrecognized states has fewer than ten entries. The speaker probably had lots of interesting things to say about these states, and I’m sure there are commonalities between them. But the talk was couched in the language of scientific generalization and theory-building, as if this sample (with, again, N < 10) were just a slice of a huge unrealized world. Which raises the question, what are we aiming to generalize to, here?

Once you spot this pattern it is everywhere. Take a recent article in the American Economic Review: “Who Benefits from Online Gig Economy Platforms?” I haven’t evaluated the work (I’m sure it’s good), but you can skim this paper and not find a single name of a gig economy platform. They don’t say which platform they did their research on, which may be due to reasonable privacy or legal or ethical concerns, but they also simply don’t mention Uber or any of the others. I asked Claude and it guesstimated that Uber, Lyft, Doordash and Instacart have about 10 million workers in the US. That seems enough that a lot of “the gig economy” might be driven by choices of individual companies!

From the same issue, there’s “Leaders in Social Movements: Evidence from Unions in Myanmar”. Again, the text of articles like these is typically careful to point out the risks of generalization, how Myanmar might be special, and so on. But look at the framing of the abstract:

Social movements are catalysts for crucial institutional changes. To succeed, they must coordinate members' views (consensus building) and actions (mobilization). We study union leaders within Myanmar's burgeoning labor movement. Union leaders are positively selected on both ability and personality traits that enable them to influence others, yet they earn lower wages. In group discussions about workers' views on an upcoming national minimum wage negotiation, randomly embedded leaders build consensus around the union's preferred policy. In an experiment that mimics individuals decision-making in a collective action setup, leaders increase mobilization through coordination.

The avowed purpose of the article isn’t just to study union leaders in Myanmar (a country with 50m people, so its politics is an important topic in its own right). It’s to study “social movements”. That’s a big-tent concept, which might include medieval millenarian cults, Victorian teetotallers, or modern effective altruists. Why would we even set out to learn about something so strangely abstract?

“Cargo cult science”

I won’t show more examples, because it’s easy to find your own: pick any journal. One thing that seems to be happening here is what Richard Feynman called “cargo cult science”.

In the South Seas there is a Cargo Cult of people. During the war they saw airplanes land with lots of good materials, and they want the same thing to happen now. So they’ve arranged to make things like runways, to put fires along the sides of the runways, to make a wooden hut for a man to sit in, with two wooden pieces on his head like headphones and bars of bamboo sticking out like antennas—he’s the controller—and they wait for the airplanes to land. They’re doing everything right. The form is perfect. It looks exactly the way it looked before. But it doesn’t work. No airplanes land. So I call these things Cargo Cult Science, because they follow all the apparent precepts and forms of scientific investigation, but they’re missing something essential, because the planes don’t land.

An interesting thing about this speech (which you should read if you are any sort of researcher and haven’t already) is that Feynman is not criticizing the qualitative, diary-writing, auto-ethnography side of social science, but the stuff that is meant to be rigorous, with p values and other such paraphernalia, like psychology experiments. And he presciently points out many of the problems with this research, including p-hacking and publication bias. But even without all that, I think Cargo Cult is the right description. The universe is made of uniform material stuff. Hydrogen atoms behave the same everywhere. At least, that is the basic hypothesis of physical science, and so far it seems (miraculously?) to hold true. Social scientists want to find the equivalent of atoms: objects with universal laws. So we cargo cult ourselves into big abstract nouns: “gig economy platforms”, “populism”.

And then sometimes when things change, there’s a nervous little shuffle. In political science, there was “democracy and dictatorship”, and an associated literature and generalizations. Then it became obvious that non-democracies were not always “dictatorships” in a meaningful sense: even Erdogan or Xi are not quite dictators (though Putin is probably close). So the concepts changed to “democracy and autocracy”.

Studying autocracy is important, and generalizations are helpful! Erdogan, Xi, Putin and other modern day autocrats are obviously like each other in some ways, they learn from each other and help each other, and we should try to have good mental and discursive models of how they tick. What researchers seem not to like thinking about is that this work is not very different from history, or journalism. It means understanding an eddy in the great stream of history, which has come and will very likely pass, quite soon in the grand scheme of things. Gathering data sets and reporting p values may (may!) make this work more rigorous than what journalists or historians do. But it doesn’t change it fundamentally. If you don’t acknowledge this, you may end up doing what my last diagram shows: drawing a straight line on a messy graph.

To be clear, I think that most good researchers know that papers on, say, populism (1, 2) are not studies of a human universal. But they write as if they are, and that is really the problem. It means that what social scientists claim to be doing is out of sync with what they actually know they are doing. This hinders science. It means we can’t have rigorous public discussions of methodology, based on what we are actually doing. At worst, it can become a kind of nudge-and-wink collective fraud, on ourselves and on outsiders who take academic work seriously.