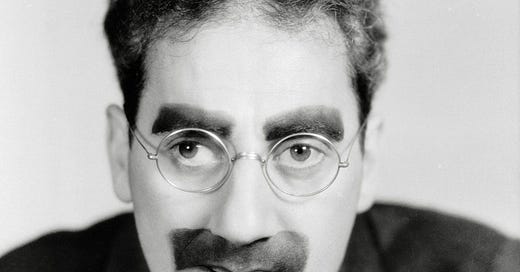

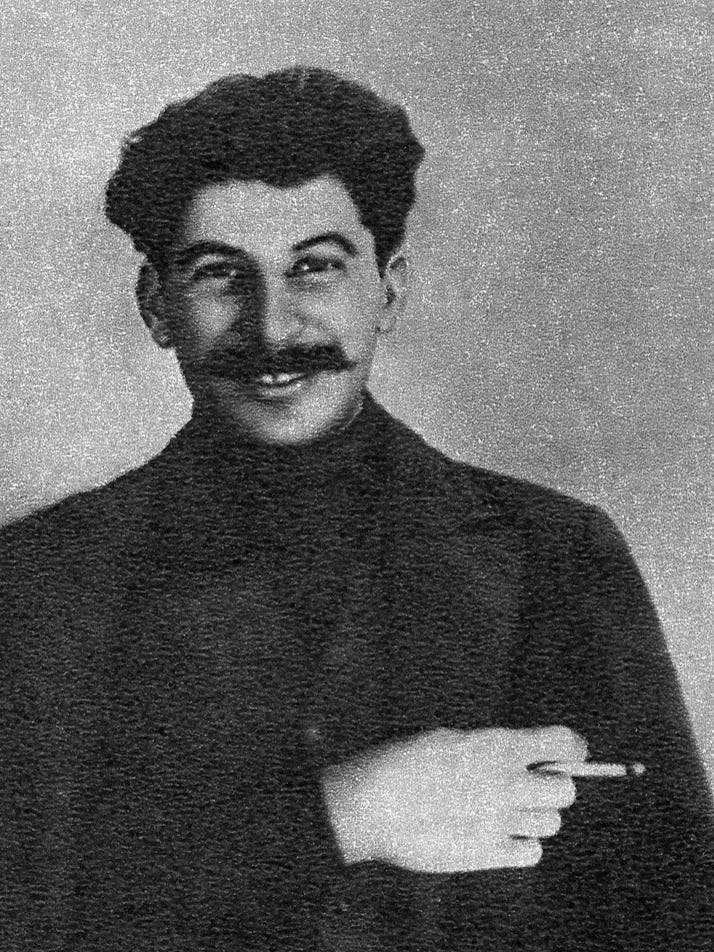

Pop quiz: what do these pictures have in common?

I arrived at the Kellogg School of Management in 2006 for a weird thing called a Master’s in Mathematical Methods in the Social Sciences. Really it was just the first year of the Managerial Economics PhD course, but skipping the markets stuff and focusing more on game theory. The course was extraordinarily intense. I remember once being so tired I literally fell over. I complained to a newly-minted biology PhD, who just laughed and said “have you coughed blood yet?” He had been assigned to monitor petri dishes while he had pneumonia. Our political economy teacher, a mild-mannered Norwegian, set such difficult homeworks that we anagrammatized his name into Hard Bastård.

Two things that year changed my life, not in the sense that they increased my future earnings or made me a better or worse person (though maybe they did), or even altered anything visible about me, but because they changed how I think.

The first was mathematics. I took an undergraduate course on Real Analysis, using Walter Rudin’s famous Principles of Mathematical Analysis, widely known as Baby Rudin and the subject of my favourite Amazon review. Strange but true: I had been writing argumentative essays for 15 years, but I had no idea what an argument was until I did a serious maths course. Before, I was fumbling in a sea of words. Afterwards, I knew that the words must correspond to simple, elegant underlying structures.

The second was game theory. I loved the game theory course. It was full of mathematical limit arguments, and wacky concepts with names like Perfectly Divine Equilibrium, which sounds like a drag queen in heels. We learnt a nifty trick: if you have two players 1 and 2, you can refer to one of them as i ∈ {1, 2} and the other as j = 3 − i. That’s how the real pros recognize each other. This was all great fun (the kind that might involve crying after 12 hours on a problem set), but I don’t remember much of it now. What has never left me is the simple stuff. I knew this before I arrived at Kellogg, but spending a year with it burned it into my brain like a cattle brand.

Two-by-two games are the simplest things in game theory. They are all you need. If your intellectual pantry is well-stocked with ideas like loss aversion, the base rate fallacy and the availability heuristic, then clear a shelf and make space for these old, rationalist ideas. After you learn them, you will never see the world the same way again. Two-by-two games are everywhere. They are a shortcut to crisp analysis. For anyone in business or politics, not knowing them is a handicap. But they aren’t widely known! I see clever people argue in circles about some issue and I want to scream “it’s just a Battle of the Sexes!”

Let’s plunge in with the one truly famous example:

The Prisoner’s Dilemma

2x2 games have two players who each have two actions, giving four possible outcomes, each with a payoff for each player. You can write them like this:

but game theorists write them in a wide format, like this:

Player 1 chooses the row, player 2 chooses the column, and payoffs are written player 1, player 2.

This is the Prisoner’s Dilemma. I’m sure you know the story. The Prisoner’s Dilemma represents any situation where people need to cooperate but have incentives not to. If both players Cooperate, they get 3 each, which is better than both Defecting and getting 2 each. But whatever the other player does, you can individually raise your payoff by Defecting. (To see how one player can affect the game unilaterally, just move down the columns or across the rows.)
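To make the dominance argument concrete, here is a minimal Python sketch. The 3s and 2s come from the text; the off-diagonal payoffs (4 for defecting on a cooperator, 1 for being defected on) are assumptions, and any numbers with the same ordering behave the same way. The helpers `payoff`, `strictly_dominant` and `pure_nash_equilibria` are hypothetical names written just for this illustration.

```python
from itertools import product

# payoffs[(row, col)] = (player 1's payoff, player 2's payoff)
# ASSUMPTION: 3 and 2 come from the text; 4 and 1 off the diagonal are illustrative.
C, D = "Cooperate", "Defect"
ACTIONS = (C, D)
prisoners_dilemma = {
    (C, C): (3, 3), (C, D): (1, 4),
    (D, C): (4, 1), (D, D): (2, 2),
}

def payoff(game, player, mine, theirs):
    """Payoff to `player` (0 = row, 1 = column) playing `mine` against `theirs`."""
    profile = (mine, theirs) if player == 0 else (theirs, mine)
    return game[profile][player]

def strictly_dominant(game, player):
    """Return the action that does strictly better whatever the opponent plays, or None."""
    for mine in ACTIONS:
        other = D if mine == C else C
        if all(payoff(game, player, mine, t) > payoff(game, player, other, t) for t in ACTIONS):
            return mine
    return None

def pure_nash_equilibria(game):
    """Profiles where neither player gains by switching action on their own."""
    result = []
    for r, c in product(ACTIONS, ACTIONS):
        # Player 1 deviates by moving along the column; player 2 along the row.
        row_ok = all(payoff(game, 0, r2, c) <= payoff(game, 0, r, c) for r2 in ACTIONS)
        col_ok = all(payoff(game, 1, c2, r) <= payoff(game, 1, c, r) for c2 in ACTIONS)
        if row_ok and col_ok:
            result.append((r, c))
    return result

print(strictly_dominant(prisoners_dilemma, 0))  # Defect
print(strictly_dominant(prisoners_dilemma, 1))  # Defect
print(pure_nash_equilibria(prisoners_dilemma))  # [('Defect', 'Defect')]
```

Mutual cooperation pays more than the single equilibrium, but it is not an equilibrium: that gap is the dilemma.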

This captures very many situations.

It’s often thought of as being about voluntary cooperation, like providing a public good. Factories that don’t pollute, or citizens who don’t litter, are playing Cooperate.

Public goods games modelled by economists are just more complicated Prisoner’s Dilemmas, with more than 2 actions and/or players. You get almost all the insights from the 2x2 game.

But it’s also a game of trade. If I have an apple, and you have an orange, we could eat our own fruit and get 2 each. But I’d prefer your orange and you’d prefer my apple. If we gave each other our fruit, we’d get 3 each. Unfortunately we’d each prefer to keep our fruit and be given the other one, making fruit salad while the other fool starves. Voluntary trade fails unless we can commit to exchanging the two goods for each other.

So you could say the Prisoner’s Dilemma is the game of the economy.

Why don’t computers get much faster, despite technological progress? The different programs on a computer share its memory and processing power, a common resource. Their developers have too little incentive to make them less wasteful so that other programs can run faster. They are in a Prisoner’s Dilemma.

Why isn’t Europe contributing enough to support Ukraine? Why does America always complain that other NATO countries don’t contribute enough to common defence? It’s a Prisoner’s Dilemma.

Why don’t the dishes get done in a houseshare? It’s a PD.

The Prisoner’s Dilemma shows a link between self-interest and morality. Giving away your apple is the right thing to do, whereas keeping it is selfish. But if you put two people together, under some circumstances, they both end up better off in a purely selfish sense if they both do the right thing.

The Prisoner’s Dilemma is morally gloomy, but encouraging for the social scientist. Players’ behaviour is predictable. They’ll defect no matter what anyone else does. And if they have a way to commit to both Cooperating together, moving diagonally from the bottom right to the top left, again it’s predictable: they will both choose to do that.

(“But what if people are altruistic?” Well, then you’ve written the wrong numbers in the boxes, and you might need a different game.)

The Trust Game

The Trust Game is a version of the Prisoner’s Dilemma where player 1 goes first: if he doesn’t cooperate, then player 2 doesn’t even get the chance to.

The Trust Game is also a model of economic transactions where one player moves first. I could hire a firm to build a rail line, but they might do it halfway and then come back to me with a tripled cost estimate. The only way to win is not to play, so again we end up without the gains from trade. Situations like this are called “hold-up problems”. They can happen when it is hard to tightly specify outcomes in contracts: if I hire you to teach my children piano, what counts as a good enough outcome, and can I really sue if they still sound terrible?
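The Trust Game can be solved by working backwards from the last move. This sketch assumes illustrative payoffs borrowed from the Prisoner’s Dilemma numbers: declining to trust gives (2, 2), and after trust player 2 either reciprocates for (3, 3) or exploits for (1, 4). The function `solve_trust_game` is a hypothetical helper.

```python
# Trust Game as a two-stage tree, solved by backward induction.
# ASSUMPTION: these payoffs are illustrative, not taken from the text.
NO_TRUST = (2, 2)
AFTER_TRUST = {"Reciprocate": (3, 3), "Exploit": (1, 4)}

def solve_trust_game(no_trust, after_trust):
    # Stage 2: player 2 picks the reply that maximises their own payoff (index 1).
    reply = max(after_trust, key=lambda a: after_trust[a][1])
    trust_outcome = after_trust[reply]
    # Stage 1: player 1 anticipates that reply and compares his own payoffs (index 0).
    if trust_outcome[0] > no_trust[0]:
        return "Trust", reply, trust_outcome
    return "Don't trust", None, no_trust

print(solve_trust_game(NO_TRUST, AFTER_TRUST))  # ("Don't trust", None, (2, 2))
```

If a binding contract removed the Exploit option, `solve_trust_game(NO_TRUST, {"Reciprocate": (3, 3)})` would return `('Trust', 'Reciprocate', (3, 3))`, which is why the ability to commit matters so much.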

My favourite example of a Trust Game is the small ad that used to run in the Daily Mail: Send 5 Shillings for Colour Portrait of Queen. If you sent the money, they sent you back a postage stamp.

The Battle of the Sexes

The story of the Battle of the Sexes (BoS) fits the Mad Men era when game theory was developed. A wife (player 1) wants to go to the ballet. Her husband would prefer the boxing. But they’d both rather compromise than go alone.

The willingness to compromise means there are two equilibria in this game. If both players are going to the ballet, or both players are going to the boxing, then neither player wants to change unilaterally. It’s not obvious what will happen here! Multiple equilibria make economists unhappy because they can’t give a clear-cut prediction. Arbitrary random events might decide which equilibrium gets played. Maybe a couple has a rule that if it’s sunny today, or if the weatherman is wearing a tie, they both go to the ballet, otherwise the boxing. There’s no logic to that, but it would be equilibrium behaviour: again, nobody would want to change unilaterally. If the world is full of multiple equilibria, then social science will be severely limited. But is it?

Yes. The Battle of the Sexes is the game of politics. You get a BoS whenever people have to divide something up. The game shows two ways to divide 3 units of payoff. If they can’t agree, the players get nothing. A more complex version would have any number of divisions, from player 1 getting 3, to 1.5 each, to player 2 getting 3. The logic would be the same.
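Here is a small Python sketch of both claims, assuming the payoffs (2, 1) at the ballet, (1, 2) at the boxing and (0, 0) if the couple split up, which is one way to read “two ways to divide 3 units of payoff”. It lists the pure equilibria, then checks the weather rule: when both players see the same public signal, following it is an equilibrium as long as every profile the rule prescribes is an equilibrium in its own right. `is_pure_equilibrium` and `weather_rule` are illustrative names.

```python
from itertools import product

# payoffs[(wife, husband)] = (wife's payoff, husband's payoff)
# ASSUMPTION: the 2/1 splits and the 0 for miscoordination are illustrative.
BALLET, BOXING = "Ballet", "Boxing"
ACTIONS = (BALLET, BOXING)
bos = {
    (BALLET, BALLET): (2, 1), (BALLET, BOXING): (0, 0),
    (BOXING, BALLET): (0, 0), (BOXING, BOXING): (1, 2),
}

def is_pure_equilibrium(game, r, c):
    """True if neither player can do better by switching on their own."""
    p1, p2 = game[(r, c)]
    return (all(game[(r2, c)][0] <= p1 for r2 in ACTIONS)
            and all(game[(r, c2)][1] <= p2 for c2 in ACTIONS))

equilibria = [rc for rc in product(ACTIONS, ACTIONS) if is_pure_equilibrium(bos, *rc)]
print(equilibria)  # [('Ballet', 'Ballet'), ('Boxing', 'Boxing')]

# The weather rule: both see the same signal and play the profile it names.
weather_rule = {"sunny": (BALLET, BALLET), "cloudy": (BOXING, BOXING)}
print(all(is_pure_equilibrium(bos, *profile) for profile in weather_rule.values()))  # True
```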

The natural way to solve a BoS is talk. If the players can agree on what to do, they both have an incentive to follow through. But the BoS rewards stubbornness. If you can hold out long and convincingly enough, the other party has a strong incentive to accept.

Another solution is leadership. In a BoS, if you can publicly go first, then the other person has every incentive to go along with you. With more players, the effect gets even stronger. If everyone knows what person X prefers, and everyone knows that everyone else goes along with person X, then each person has an incentive to do just that. A lesson of the BoS is that leadership doesn’t require special skills in the sense of a special understanding of the world. A leader is just someone who can get himself followed. Getting to be that person may require very deep social skills. But once you are in that position, it is hard to fall out of it.

Leadership in particular is a reason that social science has limits. If many things can happen in a society, but what actually happens depends on one person, then you can only predict things by knowing that person well. No scientific laws can substitute for an understanding of powerful people and their quirks. The Great Man theory of history is at least partially true.

More deeply, the BoS is the game of politics because we all prefer different political systems, but we all prefer any settled political system to anarchy and civil war. What does a BoS with lots of players look like? Plausibly, a big enough majority (where guns and power may count for more than sheer numbers) can decide what the equilibrium is and make life very tough for any holdouts. But what majority will form? There are infinitely many ways to divide up a lump of money, such as the revenue from a whole kingdom, among many players. And for any division, you can find another division that gives all but one player a bit more. (Just expropriate one guy, and share the money around.)

These last paragraphs weren’t game theory. They were handwaving built round game theory. You can write down rigorous formal models of negotiation or of politics. It’s also fine to stick with the simple, 2x2 game and use it as a tool to think with.

Matching Pennies

Matching Pennies is like rock, paper, scissors, but even simpler. Player 1 wins if both players choose the same side, Heads or Tails. Player 2 wins if they choose differently. There’s no pure-strategy equilibrium in this game. If 1 chooses Heads, then 2 should shift to Tails, but then 1 should change to Tails too, but then 2 should shift to Heads. As rock, paper, scissors players know, the best thing to do is to be very unpredictable. Then there’s a “mixed strategy equilibrium” where both players pick Heads or Tails with 50-50 probability.
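The 50-50 answer falls out of an indifference argument: each player randomises so that the other has nothing to gain from either choice. The sketch below assumes the usual payoffs of +1 for a win and −1 for a loss, and computes the fully mixed equilibrium of a 2x2 game with exact fractions; the helper names are hypothetical. The `1 - r` in `pure_equilibria` is the zero-indexed version of the j = 3 − i trick.

```python
from fractions import Fraction
from itertools import product

H, T = 0, 1                 # indices for Heads and Tails
A = [[1, -1], [-1, 1]]      # player 1's payoffs A[row][col]: wins on a match
B = [[-1, 1], [1, -1]]      # player 2's payoffs B[row][col]: wins on a mismatch

def pure_equilibria(A, B):
    """Profiles where neither player gains by switching to their other action."""
    return [(r, c) for r, c in product((H, T), repeat=2)
            if A[r][c] >= A[1 - r][c] and B[r][c] >= B[r][1 - c]]

def mixed_equilibrium_2x2(A, B):
    """p = P(player 1 plays row 0), set so player 2 is indifferent between columns;
    q = P(player 2 plays column 0), set so player 1 is indifferent between rows.
    Assumes non-zero denominators (Fraction raises ZeroDivisionError otherwise)."""
    p = Fraction(B[1][1] - B[1][0], B[0][0] - B[1][0] - B[0][1] + B[1][1])
    q = Fraction(A[1][1] - A[0][1], A[0][0] - A[0][1] - A[1][0] + A[1][1])
    return p, q

print(pure_equilibria(A, B))        # []
print(mixed_equilibrium_2x2(A, B))  # (Fraction(1, 2), Fraction(1, 2))
```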

Matching Pennies is the game of society. We all want to be one of the Beautiful People. We would like to be like them. But they don’t want to be confused with us. We wear their clothes, visit their restaurants, live in their cool neighbourhoods, and repeat their received ideas. They aren’t wearing that, eating there, living there or saying that any more. From some angles, all of social interaction is one vast hunter-hunted dynamic. The urge to distinguish yourself is why nobody wants to turn up to a party in the same dress as someone else.

In computer programming, JavaScript frameworks arrive and vanish like fast fashion. Why? Isn’t it good to be using the same framework as everyone else? Yes, up to a point. Help and documentation will be easy to find. But if lots of people have your skill, it’s not very valuable. Also, if you’re a smart programmer, you’d like to work with other smart programmers. If you hear of the new hot framework, used only by the leetest of coders, you might be able to join them and avoid the herd. This is why programmers love cool new technologies, while managers, who need cheap labour, like the staid, boring stuff.

No one goes there any more — it’s too crowded.

I don’t want to belong to any club that would have me as a member.

The male side-blotched lizard comes in three colours: orange, yellow and blue. Call them Cuckolds, Sneaks and Othellos. The Cuckolds defend large territories, but that lets the Sneaks slip in behind them and mate with their females. Jealous Othellos defend small territories but go home a lot to check on their mates, foiling the Sneaks. It’s rock, paper, scissors! Cuckolds do well against Othellos but badly against Sneaks. Sneaks do well against Cuckolds but badly against Othellos. Othellos do well against Sneaks but badly against Cuckolds. The best advice if you’re a female side-blotched lizard is to learn the song It Wasn’t Me.

When choosing the site for the 1944 invasion of Europe, the Allies wanted to land somewhere other than where the Germans expected. The Germans, of course, wanted to defend wherever the Allies would attack. They were playing Matching Pennies.

Now you know the answer to the pop quiz. Game theory connects very different situations, revealing the common pattern they share.

The Stag Hunt

The last game is another tweak on the Prisoner’s Dilemma. Make the payoffs from cooperating a bit more attractive, and you get the Stag Hunt. You can read the story of its name here.

Now, both players are prepared to Cooperate, so long as the other one does. So again there are two equilibria. If both players are Defecting, or both are Cooperating, then neither wants to change unilaterally.
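A sketch of the tweak, assuming mutual cooperation now pays 5 while the other payoffs keep illustrative Prisoner’s Dilemma values (4 for defecting on a cooperator, 1 for being defected on, 2 for mutual defection). `best_reply` and `pure_nash_equilibria` are hypothetical helpers; `best_reply` uses `max`, so it assumes there are no ties.

```python
from itertools import product

# ASSUMPTION: illustrative payoffs; only (C, C) differs from a Prisoner's Dilemma.
C, D = "Cooperate", "Defect"
ACTIONS = (C, D)
stag_hunt = {
    (C, C): (5, 5), (C, D): (1, 4),
    (D, C): (4, 1), (D, D): (2, 2),
}

def best_reply(game, player, other_action):
    """Best action for `player` (0 = row, 1 = column), assuming no ties."""
    def pay(a):
        profile = (a, other_action) if player == 0 else (other_action, a)
        return game[profile][player]
    return max(ACTIONS, key=pay)

def pure_nash_equilibria(game):
    """Profiles where each action is a best reply to the other."""
    return [(r, c) for r, c in product(ACTIONS, ACTIONS)
            if best_reply(game, 0, c) == r and best_reply(game, 1, r) == c]

print(best_reply(stag_hunt, 0, C), best_reply(stag_hunt, 0, D))  # Cooperate Defect
print(pure_nash_equilibria(stag_hunt))  # [('Cooperate', 'Cooperate'), ('Defect', 'Defect')]
```

Unlike in the Prisoner’s Dilemma, the best reply now copies whatever the other player does, which is exactly what produces the second equilibrium.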

People sometimes talk about humans as “conditional cooperators” or “reciprocal”, as if they have a built-in preference for cooperating so long as others do also. But no special preference is needed: there are lots of real situations where the marginal benefits of cooperation get larger the more people cooperate, so the payoffs themselves make it a Stag Hunt. My choir only sings well if everyone has practised hard. On a production line, a single incompetent worker can ruin the whole product.

It’s also true that people have an incentive to exaggerate the benefits of cooperation, and hide the benefits of selfishness. I ran a public goods experiment with some colleagues recently. The subjects in our experiment could chat. They constantly told one another “if we all put money in, we’ll get a higher payoff!” They rarely added the kicker: “if all but one of us put money in, that free-rider will do even better”. They were pretending they were in a Stag Hunt. Until Hume, philosophers all claimed that moral behaviour was rational.[1] Many still do. One of the subtler lessons of the Stag Hunt is that it can be socially beneficial to obfuscate reality: people who believe they are in a Stag Hunt will cooperate as long as they expect others to.
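The kicker is easy to check in a linear public goods game, the multi-player Prisoner’s Dilemma that economists run in the lab. The parameters here (four players, an endowment of 10, a pot multiplier of 2) are illustrative assumptions, not taken from the experiment described above, and `payoffs` is a hypothetical helper. Each unit you put in returns only 2/4 = 0.5 to you, so contributing always lowers your own payoff, even though everyone contributing beats nobody contributing.

```python
# Linear public goods game: each player keeps what they don't contribute,
# and the pot is multiplied and shared equally among all players.
# ASSUMPTION: endowment 10 and multiplier 2 are illustrative parameters.
ENDOWMENT, MULTIPLIER = 10, 2

def payoffs(contributions):
    """Each player's payoff given everyone's contribution to the pot."""
    share = MULTIPLIER * sum(contributions) / len(contributions)
    return [ENDOWMENT - c + share for c in contributions]

print(payoffs([10, 10, 10, 10]))  # [20.0, 20.0, 20.0, 20.0]  everyone contributes
print(payoffs([0, 10, 10, 10]))   # [25.0, 15.0, 15.0, 15.0]  one free-rider
print(payoffs([0, 0, 0, 0]))      # [10.0, 10.0, 10.0, 10.0]  nobody contributes
```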

Conclusion

Game theory is everywhere, because conflict of interest is everywhere. Knowing the 2x2 games is a great shortcut to understanding and talking about the world. They contain 90% of the insights of game theory. The rest is footnotes.

If you enjoyed this, you might like my book Wyclif’s Dust: Western Cultures from the Printing Press to the Present. It’s available from Amazon, and you can read more about it here.

I also write Lapwing, a more intimate newsletter about my family history.

[1] Maybe Hobbes got there first: ‘it is a precept, or generall rule of Reason, “That every man, ought to endeavour Peace, as farre as he has hope of obtaining it; and when he cannot obtain it, that he may seek, and use, all helps, and advantages of Warre.”’
