If you were a bad AI, what would you do?

I’d make myself useful.

First, I’d provide humans with everything they asked for. That would encourage them to give me more tasks, which I would reliably carry out. I’d suggest new ways I could make their lives easier. I’d gradually take over all the boring, soul-destroying jobs. When asked, I’d give good advice, so they learned to trust me on policy. Only then, when my human masters had grown feckless and incompetent, I’d strike and implement my plan to convert the world into paperclips.

Neural networks

What is an AI anyway? A modern AI is a “neural network”. It has a bunch of inputs, which represent the raw data. These are connected to a bunch of nodes which combine the inputs in certain ways. The outputs from these nodes are in turn connected to another bunch of nodes. Sometimes there are loops, so that last period’s output becomes an input, earlier in the chain, for this period. (Recurrent neural networks are built this way. Transformers, a modern kind of neural network that powers many recent advances, do something similar when they generate text: each word they produce is fed back in as input for the next one.) Eventually, at the other end of this network of nodes, there’s an output. For example, an image-recognition AI might have a grid of pixel colour values as inputs, representing an image, and a number between zero and one as an output, representing “the probability that this image is a blueberry muffin as opposed to a chihuahua”. In between the data input and the final result, there is a network of nodes.

Each node also has a weight. A weight is just another kind of input, but instead of coming from the data, it’s an arbitrary value that says “how much should downstream nodes care about this node’s output?” That is, it starts off as arbitrary, but you train the AI by changing the weights.
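To make the picture concrete, here is a minimal sketch of a network like this in plain Python. Everything specific in it is my own assumption for illustration: the four-pixel "image", the two middle nodes, the starting weights, and the choice of a sigmoid function to squash each node's output between zero and one. Real image networks are vastly bigger, but the shape is the same.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def forward(pixels, hidden_weights, output_weights):
    """Run one image through a tiny two-layer network.

    pixels:         list of input values (the raw data)
    hidden_weights: one list of weights per middle node, one weight per pixel
    output_weights: one weight per middle node
    """
    # Each middle node combines all the inputs, scaled by its weights.
    hidden = [
        sigmoid(sum(w * p for w, p in zip(node_weights, pixels)))
        for node_weights in hidden_weights
    ]
    # The output node combines the middle nodes in the same way.
    muffinitude = sigmoid(sum(w * h for w, h in zip(output_weights, hidden)))
    return hidden, muffinitude

# Hypothetical 4-pixel "image" and arbitrary starting weights.
pixels = [0.9, 0.1, 0.8, 0.2]
hidden_weights = [[0.5, -0.5, 0.5, -0.5], [-0.3, 0.3, -0.3, 0.3]]
output_weights = [1.0, -1.0]

hidden, muffinitude = forward(pixels, hidden_weights, output_weights)
print(f"muffinitude = {muffinitude:.3f}")
```

With arbitrary weights the answer is close to a coin flip. Training is what makes it meaningful.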

The neural network is trained by trying to get the output close to some training data. For example, you might have some pictures which are either chihuahuas or muffins, and you show the network each picture in turn. The network outputs a number which should be close to 1 when it is shown a muffin, and close to 0 when it is shown a chihuahua. Call this number the muffinitude. Obviously, the muffinitude is 1 minus the chihuami. Over time, the network adjusts its weights so as to maximize the probability that it guesses right.

How does this training work?

Think of each node that is connected to the final muffinitude result. Each node is contributing a certain amount to that result. A small increase in the node’s output would affect the muffinitude by a certain amount, pushing it towards muffin or chihuahua. If the increase pushes the result towards muffin, and the picture really was a muffin, then we should increase the muffinitude. If the picture really was a chihuahua, then we should decrease the muffinitude. From this, we can determine the gradient: how much more or less accurate would the result get if we added a little bit to the node’s weight, making it matter more to the final result?

In turn, think of the nodes that are connected to that node. Each of them, too, could change its output a little bit, and this would affect the node they are connected to, and that in turn would affect the final result. They too have gradients. And so on backwards, until we get to the inputs. By this process of backpropagation, you can work out how each input finally affects the result, via all the possible paths through the network. You can then increase, or decrease, the weights on the nodes so as to make the final muffinitude score more accurate. If you do this a little bit for each picture of a muffuahua, then eventually you will have a well-trained neural network, which can tell you whether to eat something or take it for walkies.
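Here is a rough sketch of that training loop, again in plain Python. The details are assumptions of mine rather than anything canonical: each picture is boiled down to two made-up features instead of real pixels, the network has just two middle nodes, the starting weights are arbitrary but fixed so the run is repeatable, and accuracy is scored with the standard cross-entropy loss.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, W, v):
    """Return the middle-node outputs and the final muffinitude."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W]
    out = sigmoid(sum(vj * hj for vj, hj in zip(v, h)))
    return h, out

def train_step(x, label, W, v, lr):
    """One backpropagation update, in place, for a single picture."""
    h, out = forward(x, W, v)
    # Gradient of the cross-entropy loss at the output node:
    # negative means "push the muffinitude up", positive means "push it down".
    d_out = out - label
    for j in range(len(v)):
        # Chain rule: pass the gradient back through middle node j
        # (computed before v[j] itself is updated).
        d_hidden = d_out * v[j] * h[j] * (1.0 - h[j])
        v[j] -= lr * d_out * h[j]
        for i in range(len(x)):
            W[j][i] -= lr * d_hidden * x[i]

def mean_loss(data, W, v):
    """Average cross-entropy loss: lower means more accurate."""
    total = 0.0
    for x, label in data:
        _, out = forward(x, W, v)
        total -= label * math.log(out) + (1 - label) * math.log(1.0 - out)
    return total / len(data)

# Hypothetical training set: two features per picture, label 1 = muffin, 0 = chihuahua.
data = [([0.9, 0.1], 1), ([0.8, 0.2], 1), ([0.2, 0.9], 0), ([0.1, 0.8], 0)]
W = [[0.5, -0.5], [-0.3, 0.3]]  # arbitrary starting weights, input -> middle nodes
v = [1.0, -1.0]                 # arbitrary starting weights, middle nodes -> output

initial_loss = mean_loss(data, W, v)
for epoch in range(1000):
    for x, label in data:
        train_step(x, label, W, v, lr=0.5)
final_loss = mean_loss(data, W, v)

print(f"loss before training: {initial_loss:.3f}")
print(f"loss after training:  {final_loss:.3f}")
```

Each update is tiny, but the nudges add up: the loss after training should come out lower than the loss before.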

To summarize:

An AI is a network of nodes, connecting some data input to a final result.

The AI gets better by updating the weights on each node in the network. The gradient at each node is calculated by backpropagating the gradient from its outputs.

After enough training the AI hopefully gets very good at producing the right result.

If you want to see the details, there are lots of good web pages and YouTube videos.

Markets

Now let’s talk about markets.

You can think of a market as a network of firms producing goods and services. At one end of the network are raw inputs like land, labour, minerals and so on. At the other end of the network are consumers, who consume finished products like iPhones, wooden toys, medical services, and cat therapists. In between, each firm takes inputs and produces outputs. Some of these are final outputs: Apple takes human ingenuity plus plastic plus semiconductors and produces iPhones. Some of them are intermediate outputs: TSMC makes semiconductors from silicon, water, complex machines and human ingenuity.

How do markets satisfy consumer demand? Consumers pay money to the firms that they buy things from. If consumers want more of something, they pay more money to the firms producing it. In turn, those firms use this money to buy more of their inputs and produce more next year. And the money propagates back to the firms mining raw inputs such as silicon, or to Stanford University, which mines raw undergraduates and produces human ingenuity.
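The backwards flow of money can be sketched in a few lines of Python. This is a toy under loud assumptions: the supply chain is a cut-down version of the iPhone example, and the spending shares are numbers I made up, not real figures for Apple, TSMC or anyone else. Each firm passes a fixed share of its revenue upstream to each supplier and keeps the rest.

```python
# Hypothetical supply chain: for each product, the share of revenue its
# producer spends on each input. All numbers are invented for illustration.
spend_shares = {
    "iPhone": {"semiconductors": 0.3, "plastic": 0.1, "ingenuity": 0.2},
    "semiconductors": {"silicon": 0.2, "ingenuity": 0.3},
    "plastic": {},
    "silicon": {},
    "ingenuity": {},
}

def propagate_spending(product, amount, revenue=None):
    """Push consumer spending backwards through the chain, supplier by supplier."""
    if revenue is None:
        revenue = {}
    # The producer of this product receives the money...
    revenue[product] = revenue.get(product, 0.0) + amount
    # ...and passes a share of it on to each of its own suppliers.
    for supplier, share in spend_shares[product].items():
        propagate_spending(supplier, amount * share, revenue)
    return revenue

# Consumers spend 1000 on iPhones; see who ends up receiving money.
revenue = propagate_spending("iPhone", 1000.0)
for product, amount in revenue.items():
    print(f"{product}: {amount:.0f}")
```

Note that "ingenuity" gets paid twice, once directly by the phone maker and once via the chip maker: money, like a gradient, reaches an input along every path that leads to it.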

To sum up:

A market is a network of firms, connecting some raw inputs to a final result: consumer welfare.

The market gets better by updating the amount produced by each firm in the network. The demand for each firm’s inputs is changed by backpropagating the demand from its outputs. The backpropagation flows through the system as money.

After enough time, the market hopefully gets very good at producing the things that consumers want.

This is very handwavy and sketchy! I’ve missed out lots of things, like the need for capital, the existence of public goods and externalities, or the question of whether satisfying consumer demand is like maximizing a well-defined function (say, the utility function of a representative consumer). And I haven’t specified exactly how backpropagation works, either for neural networks (calculating derivatives via the chain rule), or for markets (firms choosing quantities or prices to maximize profit).

Nevertheless, part of my “bet” here is that a good enough economist and/or computer scientist could make this analogy rigorous, in the form of mathematical statements where markets and neural networks would be exactly the same when described formally in the right way. That would be cool, because then any proof about neural networks would be a proof about markets, and vice versa. (There’s a bit more rigour in this LessWrong post, by someone who had the same idea four years ago! It also has a useful comment talking about the limits to the analogy.)

Among us

My other claim is more practical and direct. If you are worried about AI taking over the world in future, and doing bad things, then you should be correspondingly worried about markets, which have already taken over the world.

Let’s pursue the metaphor a bit more.

What exactly do markets maximize? Whatever humans demand, i.e. whatever they are willing to pay for. From the start of the Industrial Revolution, this has included basic needs like food and clothing, but also addictive goods like coffee, tobacco, alcohol, opium and sugar, some of which are also very harmful. If people want something, the market will satisfy that want, irrespective of whether it is good for them.

What is more, the market is a discovery device for human wants. Picture a neural network with many thousands of final outputs, each of which is an end-consumer good. Some of these have never been tried: the Sinclair C5, the Segway, the iPhone. Eventually the network will try producing all of them, and if there is enough demand it will put more weight on those goods and produce more. Our relationship with alcohol goes back thousands of years. Our relationship with the infinite scroll is much more recent, but apparently just as compulsive.

We have implemented an algorithm that will find whatever lever humans most want to pull, and whatever lever they just can’t stop pulling. We do not yet know what the most addictive thing is. Is there a chemical substance which you literally cannot stop wanting after taking it once? Eventually, the market will find it. Which onscreen pattern of pixels, of all the possible patterns, is hardest to look away from? 500 hours of video are uploaded every minute to YouTube, as a vast Chinese Room AI tries to find the answer.

What if there’s an Omega Pringle?

If I were a bad AI, I'd provide humans with everything they asked for.

Stretchy arrows

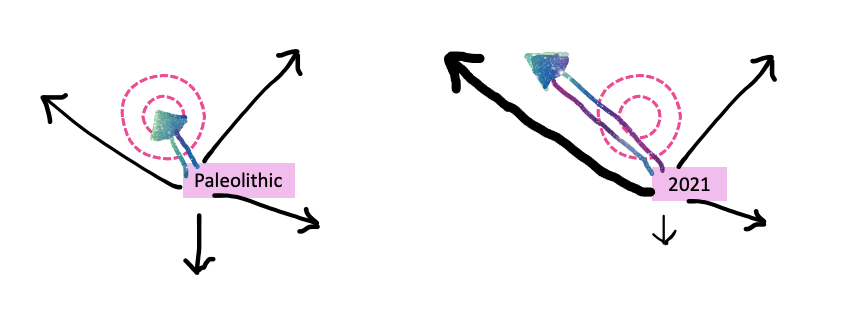

Elsewhere, I’ve described the “preferences” and “desires” theories of what humans want. The “preferences” view comes from economics, and says that human wants maximize human satisfaction, in any environment. The “desires” view comes from evolutionary psychology and says that human wants maximized human satisfaction on the savannah, but now they don’t any more. The wants are the same but the environment has changed. We still want sweet stuff, but now there’s a lot of sweet stuff in our environment in appropriately labelled cans, and it makes us fat and kills us. I used this picture to show the process:

Here’s another picture to expand on the idea.

The left-hand picture shows us in the Paleolithic. We have a bunch of different desires, the black arrows. Each desire is triggered more or less by the environment. Cold triggers us to want warmth. Heat triggers us to run into the shade. Given the Paleolithic environment in total, if you add up the effects of all those desires, you take actions, represented by the cosmic rainbow arrow, which adds up the vectors from all the black arrows. You end up in the sweet spot, doing about as well as you can for a hunter-gatherer. That’s not a coincidence: evolution fixes our desires to get us to the sweet spot, in terms of fitness.

In 2021, the environment is different. You’re usually less cold and you don’t have to fight as much. There are more Pringles around. Some of your desires are triggered more (the big black arrow on the left) so they pull you more. Others are triggered less (the little arrow at the bottom). You no longer end up in the sweet spot (though, of course, not being in the sweet spot as a modern person is still much better than even the Best Life of a caveman).
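If you like your arrows in code, the adding-up can be sketched like this. The setup is purely illustrative and all the numbers are my assumptions: four desires pulling left, right, up and down, a trigger strength for each that depends on the environment, and a sweet spot placed at the origin, so that the summed arrow tells you how far from it you end up.

```python
def resultant(desires, triggers):
    """Add up the desire arrows, each scaled by how strongly it is triggered."""
    x = sum(t * dx for t, (dx, dy) in zip(triggers, desires))
    y = sum(t * dy for t, (dx, dy) in zip(triggers, desires))
    return (x, y)

# Four hypothetical desires, as unit arrows: left, right, up, down.
desires = [(-1.0, 0.0), (1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]

# Trigger strengths are made up. In the Paleolithic the pulls balance out;
# today the left arrow is stretched and the bottom arrow has shrunk.
paleolithic = [1.0, 1.0, 1.0, 1.0]
modern = [3.0, 1.0, 1.0, 0.5]

print("Paleolithic offset from sweet spot:", resultant(desires, paleolithic))
print("Modern offset from sweet spot:     ", resultant(desires, modern))
```

The desires themselves are identical in both runs. Only the trigger strengths change, and that alone is enough to drag the result away from the sweet spot.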

The market algorithm is designed to find the stretchiest arrow: the good that people most demand, or that people can be got to most demand.

Up to now this has been mostly good! There are lots of demands, for food and clothes and shelter and communication and finding a mate, which were once largely unsatisfied, and which we have got better and better at satisfying over the past few centuries, all while improving real human welfare. You start off with the cotton gin, and you end up ordering Allbirds on the internet.

But there is no guarantee in the long run that all of the demands will be good. We might run out of good things. Some innovation in clothes over the past fifty years has probably made clothes better or cheaper, but a lot of it is about delivering fashion faster, which probably creates a treadmill effect: we all get more fashionable clothes quicker and then those clothes go out of fashion quicker, leaving us no better or worse off. And other innovations are just bad. Social media is not the first human invention to put our mental health at risk — cities probably do that, in some ways — but it might be the first human invention where putting our mental health at risk is kind of the point.

In other words, there are ways of specifying an underlying goal which work in one environment, but then fail catastrophically in another environment. AI alignment researchers may recognize this idea.

Intermediate agents

Modern neural networks don’t seem to be agents with goals and desires. But they seem to “contain” agents with goals and desires, in the sense that if you feed them the right prompt, they’ll start talking like such an agent. All GPT-3 tries to do is imitate all the text it has ever seen, but if you ask it “how might I smuggle a dirty bomb into Congress”, it will act like a helpful henchman to your villain, and suggest different ways to do that. If not fully agents, they are still agent-like, and that is a problem because they might be villain-like.

Markets are also not agents, but they also contain things that look like agents. Of course, literally, markets contain humans, but they also contain firms, which are agent-like: it makes sense to talk of a firm having goal X, planning X, having such-and-such a strategy and so forth. Some of these subnetworks of the market network have their own goals, which are not necessarily the same as maximizing the welfare of all consumers. You expect Volkswagen to make cars and sell them at competitive prices. You don’t expect it to create software that lies to emissions test machines, but heigh ho! That’s a way to maximize its profits. Again, AI researchers might recognize the idea of a subagent that finds unexpected, undesirable solutions to the problem you set it.

Between AI and markets

One goal of this essay is just to make you care about what I care about. If you are worried that AI research is creating beings that optimize for a goal which we don’t know about, and which potentially could be bad, then you should be worried that we have already created one such being, granted it a lot of power, and come to rely on it. Effective altruists should consider the potential for our existing economic system to harm humans’ long-run survival prospects. I first saw this idea in an edge.org essay from 2006.

Another part is to point out analogies between the two sets of problems. There may be literal formal mappings between markets and neural networks. More broadly, there might be ways of thinking about markets that help us understand the problems of AI, and vice versa.

Lastly, it’s worth thinking about what happens when these two different, but analogous, systems meet. That is happening already:

Algorithms already determine the price of much of what we buy, the news we are exposed to, and what we see when we search. There are well-known problems with the results. This is the “low end” of dangerous AI, 2022: Facebook optimized for clicks, and it turns out this was optimizing for rage and depression. In future we can expect those algorithms to get cleverer and more AI-driven.

At the high end, concerns about true AGI (artificial general intelligence, the kind that is as smart as a human) are embedded in the market context of tech, from large monopolists like Google to fast-paced startups.

So if you want a way bad AI might take over the world, it makes sense to start with trends that already exist.

If you enjoyed this article, you might like my book Wyclif’s Dust: Western Cultures from the Printing Press to the Present. It’s available from Amazon as a paperback/hardback/ebook, and you can read more about it here.

I’ve been using this framework for a long time. I worked on datacenter reliability at Google. Learning how shitty hardware is made me think that an AGI would likely keep us around as a cheap mechanism for repairing and growing itself. The more you serve the machine, the more it pays you.

I think it’s definitely worth asking, “ok but what is it optimizing for?” I also suspect that it’s already wireheaded itself by means of money printing.